Logging Data in PowerFlow

PowerFlow allows you to view log data locally, remotely, or through Docker.

Local Logging

PowerFlow writes logs to files on a host system directory. Each of the main components, such as the process manager or Celery workers, and each application that is run on the platform, generates a log file. The application log files use the application name for easy consumption and location.

In a clustered environment, the logs must be written to the same volume or disk being used for persistent storage. This ensures that all logs are gathered from all hosts in the cluster onto a single disk, and that each application log can contain information from separately located, disparate workers.

You can also implement log features such as rolling, standard out, level, and location setting, and you can configure these features with their corresponding environment variable or setting in a configuration file.

Although it is possible to write logs to file on the host for persistent debug logging, as a best practice, ScienceLogic recommends that you utilize a logging driver and write out the container logs somewhere else.

You can use the "Timed Removal" application in the PowerFlow user interface to remove logs from Couchbase on a regular schedule. On the Configuration pane for that application, specify the number of days before the next log removal. For more information, see Removing Logs on a Regular Schedule.

Remote Logging

If you use your own existing logging server, such as Syslog, Splunk, or Logstash, PowerFlow can route its logs to a customer-specified location. To do so, attach your service, such as logspout, to the microservice stack and configure your service to route all logs to the server of your choice.

Although PowerFlow supports logging to these remote systems, ScienceLogic does not officially own or support the configuration of the remote logging locations. Use remote logging at your own discretion.

Viewing Logs in Docker

You can use the Docker command line to view the logs of any currently running service in the PowerFlow cluster. To view the logs of a service, run the following command:

docker service logs -f iservices_<service_name>

Some common examples include the following:

docker service logs -f iservices_couchbase

docker service logs -f iservices_steprunner

docker service logs -f iservices_contentapi

Application logs are stored in the central database as well as on all of the Docker hosts in a clustered environment. These logs are stored at /var/log/iservices in both single-node and clustered environments. However, the logs on each Docker host relate only to the services running on that host. For this reason, using the docker service logs command is the best way to get logs from all hosts at once.
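When you only need recent output rather than a continuous stream, the same command can be bounded by time and line count. The following is a minimal sketch, not an official PowerFlow utility: the function name pf_service_logs and its default values are hypothetical, and it assumes the stack is named iservices as in the examples above. The --since, --tail, and --timestamps options are standard options of docker service logs.

```shell
# Hypothetical helper: show recent logs for one PowerFlow service.
# Usage: pf_service_logs <service> [since] [max_lines]
pf_service_logs() {
  service="$1"            # for example: steprunner, contentapi, couchbase
  since="${2:-15m}"       # how far back to look (assumed default)
  tail_lines="${3:-200}"  # maximum lines per task (assumed default)
  docker service logs --timestamps --since "$since" --tail "$tail_lines" \
    "iservices_${service}"
}
```

For example, `pf_service_logs steprunner 1h 50` requests the last 50 lines per task from the past hour for the steprunner service.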

Logging Configuration

The following table describes the variables and configuration settings related to logging in PowerFlow:

| Environment Variable/Config Setting | Description | Default Setting |
|---|---|---|
| logdir | The directory to which logs will be written. | /var/log/iservices |
| stdoutlog | Whether logs should be written to standard output (stdout). | True |
| loglevel | Log level setting for PowerFlow application modules. | debug/info (varies between development and production environments) |
| celery_log_level | The log level for Celery-related components and modules. | debug/info (varies between development and production environments) |

PowerFlow Log Files

PowerFlow version 2.1.0 and later include additional logging options for the gui, api, and rabbitmq services. The logs from a service are available in the /var/log/iservices directory on the host on which that particular service is running.

To aggregate logs for the entire cluster, ScienceLogic recommends that you use a tool like Docker Syslog: https://docs.docker.com/config/containers/logging/syslog/.

Logs for the gui Service

By default, all nginx logs are written to stdout. Although not enabled by default, you can also choose to write access and error logs to file in addition to stdout.

To log to a persistent file, mount a host directory to the nginx log directory, for example /host/directory:/var/log/nginx. By default, this logs both access and error logs.

The pypiserver logs are not persisted to disk by default. You can choose to persist pypiserver logs by setting the log_to_file environment variable to true.

If you choose to persist logs, you should mount a host volume to /var/log/devpi to access logs from a host. For example: /var/log/iservices/devpi:/var/log/devpi.

Logs for the api Service

By default, all log messages related to PowerFlow are written out to /var/log/iservices/contentapi.

PowerFlow-specific logging is controlled by the existing settings listed in Logging Configuration. Although not enabled by default, you can also choose to write nginx access and error logs to file in addition to stdout.

To log to a persistent file, mount a file to /file/location/host:/var/log/nginx/nginx_error.log or a directory to /host/directory:/var/log/nginx, depending on whether you want access or error logs.

Logs for the rabbitmq Service

Logs for the rabbitmq service were not previously persisted, but with PowerFlow 2.1.0, all rabbitmq service logs are written to stdout by default. You can choose to write to a logfile instead (stdout or logfile, not both).

To write to the logfile:

- Add the following environment variable for the rabbitmq service:

RABBITMQ_LOGS: "/var/log/rabbitmq/rabbit.log"

- Mount a volume: /var/log/iservices/rabbit:/var/log/rabbitmq

Under the retention policy for these logs, each log file is limited to 10 MB, and a maximum of five log files are written.

Working with Log Files

Use the following procedures to help you locate and understand the contents of the various log files related to PowerFlow.

Accessing Docker Log Files

The Docker log files contain information logged by all containers participating in PowerFlow. The information below is also available in the PowerPacks listed above.

To access Docker log files:

- Use SSH to connect to the PowerFlow instance.

- Run the following Docker command:

docker service ls

- Note the Docker service name, which you will use for the <service_name> value in the next step.

- Run the following Docker command:

docker service logs -f <service_name>
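The two commands in this procedure can be combined to take a quick snapshot of every service at once. The following is a rough sketch under stated assumptions: the function name pf_recent_logs_all is hypothetical, and it assumes that PowerFlow service names start with iservices_ as in the earlier examples. The --format option of docker service ls and the --tail option of docker service logs are standard Docker options.

```shell
# Hypothetical helper: print the last N log lines of every PowerFlow service.
# Usage: pf_recent_logs_all [lines]
pf_recent_logs_all() {
  lines="${1:-20}"   # lines per task to show (assumed default)
  # List service names only, then keep the ones in the iservices stack.
  docker service ls --format '{{.Name}}' | grep '^iservices_' |
    while read -r svc; do
      echo "== $svc =="
      # Redirect stdin so the loop input is never consumed by docker.
      docker service logs --tail "$lines" "$svc" </dev/null 2>&1
    done
}
```

Running `pf_recent_logs_all 5` prints a header line for each service followed by its five most recent log lines per task.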

Accessing Local File System Logs

The local file system logs display the same information as the Docker log files. These log files include debug information for all of the PowerFlow applications and all of the Celery worker nodes.

To access local file system logs:

- Use SSH to connect to the PowerFlow instance.

- Navigate to the /var/log/iservices directory to view the log files.
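Once you are connected, a small helper can show the most recent lines of the log files for one application or component. This is a sketch only: the function name pf_tail_log and the PF_LOG_DIR override are hypothetical, and the sketch assumes only what is stated above, namely that log files live in /var/log/iservices and that application log files are named after the application.

```shell
# Hypothetical helper: show the last lines of each log file whose name
# starts with the given application or component name.
# Usage: pf_tail_log <name_prefix> [lines]
pf_tail_log() {
  log_dir="${PF_LOG_DIR:-/var/log/iservices}"  # PF_LOG_DIR is an assumed override
  name="$1"
  lines="${2:-50}"
  for f in "$log_dir"/"$name"*; do
    [ -f "$f" ] || continue      # skip directories and unmatched patterns
    echo "== $f =="
    tail -n "$lines" "$f"
  done
}
```

For example, `pf_tail_log contentapi 100` prints the last 100 lines of every regular file in the log directory whose name begins with contentapi.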

Understanding the Contents of Log Files

The same approach to deciphering log messages applies to both Docker logs and local log files, because these logs display the same information.

The following is an example of a message in a Docker log or a local file system log:

"2018-11-05 19:02:28,312","FLOW","12","device_sync_sciencelogic_to_servicenow","ipaas_logger","142","stop Query and Cache ServiceNow CIs|41.4114570618"

You can parse this data in the following manner:

'YYYY-MM-DD' 'HH:MM:SS,sss' 'log-level' 'process_id' 'is_app_name' 'file' 'lineOfCode' 'message'

To extract the runtime for each individual task, use regex to match on a log line. For instance, in the above example, there is the following sub-string:

"stop Query and Cache ServiceNow CIs|41.4114570618"

Use regex to parse the string above:

"stop …… | …"

where:

- Everything after the | is the time taken for execution.

- The string between "stop" and | represents the step that was executed.

In the example message, the "Query and Cache ServiceNow CIs" step took around 41 seconds to run.
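The parsing described above can be sketched with awk. This is an illustrative example rather than a ScienceLogic-provided tool: the function name pf_step_runtimes is hypothetical, and the sketch assumes that every field is double-quoted and separated by "," exactly as in the example message, and that the message text itself never contains that separator sequence.

```shell
# Hypothetical helper: read log lines on stdin and print one tab-separated
# row per "stop" message: application name, step name, runtime in seconds.
pf_step_runtimes() {
  awk -F'","' '
    NF >= 7 {
      app = $4                        # fourth field: application name
      msg = $NF                       # last field: the log message
      sub(/"[ \t\r]*$/, "", msg)      # drop the closing quote
      if (msg ~ /^stop .*\|[0-9.]+$/) {
        runtime = msg
        sub(/^.*\|/, "", runtime)     # keep what follows the last |
        step = msg
        sub(/\|[0-9.]+$/, "", step)   # drop the runtime suffix
        sub(/^stop /, "", step)       # drop the leading "stop "
        printf "%s\t%s\t%s\n", app, step, runtime
      }
    }'
}
```

You can feed the function from either source, for example by piping the output of docker service logs into it or by redirecting a file from /var/log/iservices to its standard input. Lines that are not "stop" messages are ignored.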

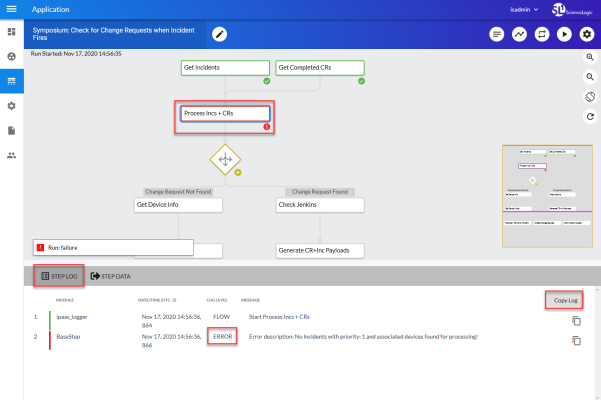

Viewing the Step Logs and Step Data for a PowerFlow Application

The Step Log tab on an Application page displays the time, the type of log, and the log messages from a step that you selected in the main pane. All times that are displayed in the Message column of this pane are in seconds, such as "stop Query and Cache ServiceNow CI List|5.644190788269043".

You can view log data for a step on the Step Log tab while the Configuration pane for that step is open.

To view logs for the steps of an application:

- In the PowerFlow user interface, select an application. The Application page appears.

- Select a step in the application.

- Click the Step Log tab in the bottom left-hand corner of the screen. The tab opens at the bottom of the page and displays log messages for the selected step.

- For longer log messages, click the down arrow icon in the Message column of the tab to open the message.

- To copy a message, triple-click the text of the message to highlight the entire text block, and then click the Copy Message icon next to that message.

- To copy the entire log, click the copy button for the log.

- Click the Step Data tab to display the JSON data that was generated by the selected step.

- Click the Step Log tab again to close it.

- To generate more detailed logs when you run this application, hover over the run button and select Debug Run.

Log information for a step is saved for the duration of the result_expires setting in the PowerFlow system. The result_expires setting is defined in the /opt/iservices/scripts/docker-compose.yml file, and its value is set in seconds. The default value for log expiration is 7 days.
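Because the setting is expressed in seconds while retention is usually discussed in days, a quick conversion helps when you edit the value. The following sketch uses a hypothetical function name, days_to_seconds, and shows only the arithmetic.

```shell
# Hypothetical helper: convert a number of days to seconds.
days_to_seconds() {
  echo $(( $1 * 24 * 60 * 60 ))
}

days_to_seconds 7    # prints 604800, the 7-day default expressed in seconds
```

A value of 604800 therefore corresponds to the default 7-day expiration, and 86400 corresponds to one day.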

Removing Logs on a Regular Schedule

The "Timed Removal" application in the PowerFlow user interface lets you remove logs from Couchbase on a regular schedule.

To schedule the removal of logs:

- In the PowerFlow user interface, select the "Timed Removal" application.

- Open the Configuration pane for the application.

- Complete the following fields:

- Configuration. Select the relevant configuration object to align with this application. Required.

- time_in_days. Specify how often you want to remove the logs from Couchbase. The default is 7 days. Required.

- remove_dex_sessions. Select this option if you want to remove Dex Server sessions from the logs.

- Save your changes and close the Configuration pane.

- Run the "Timed Removal" application.