
Use the following menu options to navigate the Skylar One user interface:

- To view a pop-out list of menu options, click the menu icon.

- To view a page containing all of the menu options, click the Advanced menu icon.

Prerequisites

Before performing the steps in this section, you must:

- Install and license each appliance

- Have an Administrator account to log in to the Web Configuration Utility for each appliance

- Have SSH or console access to each appliance

- Know the em7admin console username and password for each appliance

- Have identical hardware or virtual machine specifications on each appliance

- Have configured a unique hostname on each appliance

- Know the MariaDB username and password


- (Option 1) ScienceLogic recommends that you connect the two appliances on a secondary interface with a crossover cable.

  - When using a crossover cable, a virtual IP is required for the cluster.

- (Option 2) Connect the two appliances on a secondary interface using a dedicated private network (VLAN) that is used only for cluster communication. If a crossover cable is not possible, you must use this private network for cluster communication, and it must contain only the two High Availability nodes.

  - A virtual IP is optional when using this method.

  - One Admin Portal must be configured as a quorum witness.

  - Latency between the nodes must be less than 1 millisecond.

  - Nodes can optionally be placed in separate availability zones, but they must be in the same region.

- If your cluster uses a virtual IP, determine the virtual IP address for the cluster. The virtual IP address is associated with the primary appliance and moves between the appliances during failover and failback. The virtual IP address must be on the same network subnet as the primary network adapters of the appliances.
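The subnet requirement above can be checked before you run the cluster wizard. The following is a minimal bash sketch, assuming you already know the virtual IP, an appliance's primary IP address, and the prefix length; `ip_to_int` and `same_subnet` are hypothetical helper names, not Skylar One commands.

```shell
#!/usr/bin/env bash
# Hypothetical helper, not a Skylar One tool: checks whether two IPv4
# addresses fall in the same subnet for a given prefix length (CIDR).

# Convert a dotted-quad IPv4 address to a 32-bit integer.
# 10# forces base 10 so octets such as "08" are not read as octal.
ip_to_int() {
  local IFS=.
  local -a o
  read -r -a o <<< "$1"
  echo $(( (10#${o[0]} << 24) | (10#${o[1]} << 16) | (10#${o[2]} << 8) | 10#${o[3]} ))
}

# same_subnet IP1 IP2 PREFIX
# Exit status 0 if both addresses share the same network for PREFIX.
same_subnet() {
  local a b mask prefix=$3
  a=$(ip_to_int "$1")
  b=$(ip_to_int "$2")
  # Netmask as an integer, e.g. /23 -> 0xFFFFFE00 (bash integers are 64-bit).
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  (( (a & mask) == (b & mask) ))
}
```

For example, `same_subnet 10.64.167.10 10.64.166.251 23` succeeds because both addresses fall in 10.64.166.0/23.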

See Deployment Recommendations for details regarding availability zones and regions.

Secure boot is not supported for clustered Skylar One configurations like HA and DR.

Unique Hostnames

You must ensure that a unique hostname is configured on each Skylar One appliance. The hostname of an appliance is configured during the initial installation. To view and change the hostname of an appliance:

- Log in to the console of the Skylar One appliance as the em7admin user. The current hostname appears before the command prompt. For example, in the following login session the current hostname is HADB01:

  login as: em7admin
  em7admin@10.64.68.31's password:
  Last login: Wed Apr 27 21:25:26 2016 from silo1651.sciencelogic.local
  [em7admin@HADB01 ~]$

- To change the hostname, run the following command:

  sudo hostnamectl set-hostname <new hostname>

- When prompted, enter the password for the em7admin user.
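If you manage several appliances, one way to catch a repeated or malformed hostname is to collect each appliance's short name (for example, with `hostname -s`) and compare them. The sketch below is hypothetical and only checks the strings you pass in; it does not contact the appliances or change any hostname.

```shell
#!/usr/bin/env bash
# Hypothetical pre-check, not part of Skylar One: confirms that the short
# hostnames collected from each appliance are valid RFC 1123 labels and
# contain no duplicates.

# Exit status 0 if $1 is a valid hostname label (1-63 characters,
# letters/digits/hyphens, no leading or trailing hyphen).
valid_label() {
  local re='^[A-Za-z0-9]([A-Za-z0-9-]{0,61}[A-Za-z0-9])?$'
  [[ $1 =~ $re ]]
}

# Exit status 0 if every argument is a valid label and no two match.
# Comparison ignores case because DNS names are case-insensitive.
hostnames_unique() {
  local -A seen=()
  local h key
  for h in "$@"; do
    if ! valid_label "$h"; then
      echo "invalid hostname: $h" >&2
      return 1
    fi
    key=${h,,}
    if [[ -n ${seen[$key]:-} ]]; then
      echo "duplicate hostname: $h" >&2
      return 1
    fi
    seen[$key]=1
  done
  return 0
}
```

For example, `hostnames_unique HADB01 HADB02` succeeds, while `hostnames_unique HADB01 hadb01` reports a duplicate.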

Addressing the Cluster

A database cluster has three IP addresses: one for the primary interface on each database appliance, and an additional virtual IP address. The virtual IP address is shared between the two database appliances, to be used by any system requesting database services from the cluster.

The following table describes which IP address you should supply for the Database Server when you configure other Skylar One appliances and external systems:

| Appliance/System | IP Address |
| --- | --- |
| Administration Portal | Use the Virtual IP address when configuring the Database IP Address in the Web Configuration Utility and the Appliance Manager page (System > Settings > Appliances). |
| Data Collector or Message Collector | Include both primary interface IP addresses when configuring the ScienceLogic Central Database IP Address in the Web Configuration Utility and the Appliance Manager page (System > Settings > Appliances). |
| SNMP Monitoring | Monitor each Database Server separately using the primary interface IP addresses. |
| Database Dynamic Applications | Use the Virtual IP address in the Hostname/IP field in the Credential Editor page (System > Manage > Credentials > wrench icon). |
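The table can also be read as a simple lookup. This bash sketch is illustrative only: the role keywords and the function name `db_address_for` are invented for this example, and the addresses are whatever you assigned to your cluster.

```shell
#!/usr/bin/env bash
# Hypothetical helper that encodes the addressing table above.
# The role keywords (admin-portal, collector, snmp, db-dynamic-app) are
# illustrative names, not Skylar One settings.

# db_address_for ROLE VIP PRIMARY_IP_1 PRIMARY_IP_2
db_address_for() {
  local role=$1 vip=$2 ip1=$3 ip2=$4
  case $role in
    admin-portal|db-dynamic-app)
      echo "$vip" ;;                  # shared Virtual IP
    collector)
      echo "$ip1,$ip2" ;;             # both primary IPs, comma-separated
    snmp)
      printf '%s\n' "$ip1" "$ip2" ;;  # monitor each server separately
    *)
      echo "unknown role: $role" >&2
      return 1 ;;
  esac
}
```

For example, `db_address_for collector 10.64.167.10 10.64.166.251 10.64.166.252` prints `10.64.166.251,10.64.166.252`.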

Configuring Heartbeat IP Addresses

To cluster two databases, you must first configure a heartbeat network between the appliances. The databases use the heartbeat network to determine whether failover conditions have occurred. A heartbeat network consists of a crossover Ethernet cable, or a dedicated private network link, attached to an interface on each database.

After attaching the network cable, you must complete the steps described in this section to configure the heartbeat network.

Perform the following steps on each appliance to configure the heartbeat network:

1. Log in to the console of the Primary appliance as the em7admin user.

2. Run the following command:

   sudo /usr/local/bin/slmenu

3. Select Network Configuration.

4. Select the interface you want to configure.

5. Edit the configuration. At a minimum, set or edit the following entries. Other entries can remain at their default values.

   IPADDR="169.254.1.1"
   PREFIX="30"
   BOOTPROTO="none"
   ONBOOT="yes"

6. After you finish your edits, press and select <Save>.

7. A prompt to restart networking to activate your changes appears. Select <Save>.

8. Log in to the console of the Secondary appliance as the em7admin user and repeat steps 2-7, using 169.254.1.2 as the IP address.
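For reference, the minimum entries for the two appliances differ only in the last octet of IPADDR. The hypothetical helper below prints them for node 1 (Primary) or node 2 (Secondary); it only prints text and does not modify any configuration. The /30 prefix leaves exactly two usable host addresses (.1 and .2), one per appliance, which suits a point-to-point heartbeat link.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints the minimum heartbeat entries for node 1
# (Primary) or node 2 (Secondary), using the link-local addresses from
# the procedure above. It only prints text; nothing is applied.

heartbeat_entries() {
  local node=$1
  case $node in
    1|2) ;;
    *) echo "node must be 1 or 2" >&2; return 1 ;;
  esac
  # A /30 holds four addresses: network (.0), two hosts (.1, .2), broadcast (.3).
  cat <<EOF
IPADDR="169.254.1.${node}"
PREFIX="30"
BOOTPROTO="none"
ONBOOT="yes"
EOF
}
```

For example, `heartbeat_entries 2` prints the entries to enter on the Secondary appliance.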

Testing the Heartbeat Network

After you configure the heartbeat network, perform the following steps to test the connection:

1. Log in to the console of the Primary appliance as the em7admin user.

2. Run the following command:

   ping -c4 169.254.1.2

   If the heartbeat network is configured correctly, the output looks like this:

   PING 169.254.1.2 (169.254.1.2) 56(84) bytes of data.
   64 bytes from 169.254.1.2: icmp_seq=1 ttl=64 time=0.657 ms
   64 bytes from 169.254.1.2: icmp_seq=2 ttl=64 time=0.512 ms
   64 bytes from 169.254.1.2: icmp_seq=3 ttl=64 time=0.595 ms
   64 bytes from 169.254.1.2: icmp_seq=4 ttl=64 time=0.464 ms

   If the heartbeat network is not configured correctly, the output looks like this:

   PING 169.254.1.2 (169.254.1.2) 56(84) bytes of data.
   From 169.254.1.1 icmp_seq=1 Destination Host Unreachable
   From 169.254.1.1 icmp_seq=2 Destination Host Unreachable
   From 169.254.1.1 icmp_seq=3 Destination Host Unreachable
   From 169.254.1.1 icmp_seq=4 Destination Host Unreachable
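The two outcomes above, together with the prerequisite that latency between the nodes must be less than 1 millisecond, can be checked mechanically. This sketch reads `ping` output on standard input; it assumes the Linux `ping` output format shown above and treats each reply's round-trip `time=` value as the latency figure, which is an assumption rather than a documented Skylar One test. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `ping` output on stdin and prints
#   unreachable - no replies were received
#   slow        - at least one reply took 1 ms or more
#   ok          - every reply arrived in under 1 ms
check_heartbeat_ping() {
  awk '
    /bytes from/ {
      replies++
      # Extract the number after "time=", e.g. "time=0.657" -> 0.657.
      if (match($0, /time=[0-9.]+/)) {
        t = substr($0, RSTART + 5, RLENGTH - 5) + 0
        if (t >= 1.0) slow++
      }
    }
    END {
      if (replies == 0)  print "unreachable"
      else if (slow > 0) print "slow"
      else               print "ok"
    }
  '
}
```

For example, `ping -c4 169.254.1.2 | check_heartbeat_ping` prints `ok` for the successful output shown above.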

Deployment Recommendations

ScienceLogic strongly recommends that you use a 10 Gbps network connection for the cluster communication link. A 1 Gbps link may not be suitable and can lead to reduced database performance.

When deploying in a cloud environment, place the High Availability servers in separate availability zones, and place the quorum witness in an availability zone that contains neither High Availability server.
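A minimal sketch of these placement rules, assuming you know the availability zone name of each instance. The function name is hypothetical, and it cannot confirm that all zones are in the same region; check that separately.

```shell
#!/usr/bin/env bash
# Hypothetical pre-deployment check for cloud placements. Zone names are
# whatever your cloud provider uses.

# check_zones HA1_ZONE HA2_ZONE QUORUM_ZONE
# Fails if the quorum witness shares a zone with either HA node;
# warns (but passes) if both HA nodes share a zone.
check_zones() {
  local ha1=$1 ha2=$2 quorum=$3 rc=0
  if [[ $quorum == "$ha1" || $quorum == "$ha2" ]]; then
    echo "error: quorum witness shares a zone with an HA node" >&2
    rc=1
  fi
  if [[ $ha1 == "$ha2" ]]; then
    # Recommended, not mandatory: keep the two HA nodes in separate zones.
    echo "warning: both HA nodes are in zone $ha1" >&2
  fi
  return $rc
}
```

For example, with AWS-style zone names, `check_zones us-east-1a us-east-1b us-east-1c` passes, while placing the quorum witness in `us-east-1a` fails.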

Configuring High Availability

This section describes how to configure the Quorum Witness, Primary appliance, and the Secondary appliance for High Availability.

Configuring the Quorum Witness

A quorum witness is required in any setup that does not use a crossover cable. Two-node clusters without a crossover cable are known to be unstable. The quorum witness provides the needed "tie-breaking" vote to avoid split-brain scenarios.

Only one administration portal can be configured as a quorum witness. Please review the requirements for network placement and availability zones.
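The prerequisites and the silo-cluster-install questions reduce to a short decision: with a crossover cable, a virtual IP is required; without one, both a dedicated private link and a quorum witness are required, and the wizard exits if either is missing. The following hypothetical bash function only restates those documented rules for planning purposes; it does not call the wizard.

```shell
#!/usr/bin/env bash
# Hypothetical planning check that restates the documented HA topology
# rules. Each argument is "y" or "n".

# ha_topology_ok CROSSOVER PRIVATE_LINK QUORUM_CONFIGURED
ha_topology_ok() {
  local crossover=$1 private_link=$2 quorum=$3
  if [[ $crossover == y ]]; then
    # Crossover cable: no quorum witness needed, but a VIP is required.
    echo "crossover: virtual IP required"
    return 0
  fi
  if [[ $private_link != y ]]; then
    echo "missing: dedicated private network link" >&2
    return 1
  fi
  if [[ $quorum != y ]]; then
    echo "missing: Admin Portal quorum witness" >&2
    return 1
  fi
  echo "private link: quorum witness configured, virtual IP optional"
}
```

For example, `ha_topology_ok n y n` fails because a cluster without a crossover cable needs a quorum witness.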

Before starting this procedure, ensure that the Admin Portal is licensed and connected to the primary database. To configure the quorum witness:

1. Log in to the console of the Administration Portal appliance that will be the quorum witness as the em7admin user.

2. Run the following command:

   sudo -s

3. Enter the em7admin password when you are prompted.

4. Run the following command:

   silo-quorum-install

   The following prompt appears:

   This wizard will assist you in configuring this admin portal to be a quorum device for a corosync/pacemaker HA or HA+DR cluster. When forming a cluster that does not have a cross over cable a quorum device is required to prevent split-brain scenarios. Please consult ScienceLogic documentation on the requirements for a cluster setup.

   Do you want to configure this node a quorum device for an HA or HA+DR cluster? (y/n)

5. Enter yes. The following prompt appears:

   Please enter the PRIMARY IP address for the Primary HA server:

6. Enter the primary IP address of the primary High Availability server and press Enter. The following prompt appears:

   Please enter the PRIMARY IP address for the Secondary HA server:

7. Enter the primary IP address of the secondary High Availability server and press Enter. The following confirmation appears:

   Please check the following IP addresses are correct
   Node 1 Primary IP: <primary HA primary IP>
   Node 2 Primary IP: <secondary HA primary IP>
   Is this architecture correct? (y/n)

8. If your architecture is correct, enter yes. The following messages indicate that the quorum witness is being set up:

   Updating firewalld configuration, please be patient...
   Waiting for quorum service to start...
   Service has started successfully.
   silo-quorum-install has exited

After this setup is complete, you can configure the Primary appliance.
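Before answering the silo-quorum-install prompts, you may want to confirm that the two addresses you plan to enter are well-formed and distinct. The helpers below are hypothetical and check only the strings, not whether the hosts are reachable.

```shell
#!/usr/bin/env bash
# Hypothetical input checks for the quorum wizard prompts.

# Exit status 0 if $1 is a dotted-quad IPv4 address with octets 0-255.
valid_ipv4() {
  local re='^[0-9]{1,3}(\.[0-9]{1,3}){3}$'
  local IFS=. octet
  local -a parts
  [[ $1 =~ $re ]] || return 1
  read -r -a parts <<< "$1"
  for octet in "${parts[@]}"; do
    (( 10#$octet <= 255 )) || return 1   # 10# avoids octal parsing
  done
  return 0
}

# quorum_inputs_ok PRIMARY_HA_IP SECONDARY_HA_IP
quorum_inputs_ok() {
  valid_ipv4 "$1" || { echo "invalid Primary HA IP: $1" >&2; return 1; }
  valid_ipv4 "$2" || { echo "invalid Secondary HA IP: $2" >&2; return 1; }
  [[ $1 != "$2" ]] || { echo "both nodes cannot use $1" >&2; return 1; }
}
```

For example, `quorum_inputs_ok 10.64.166.251 10.64.166.252` succeeds, while entering the same address twice fails.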

Configuring the Primary Appliance

To configure the Primary appliance for High Availability, perform the following steps:

1. Log in to the console of the Primary appliance as the em7admin user.

2. Run the following command:

   sudo -s

3. When prompted, enter the password for the em7admin user.

4. Run the following command:

   silo-cluster-install

   The following prompt appears:

   v3.7
   This wizard will assist you in setting up clustering for ScienceLogic appliance. Please be sure to consult the ScienceLogic documentation before running this script to ensure that all prerequisites have been met.
   1) HA
   2) DR
   3) HA+DR
   4) Quit
   Please select the architecture you'd like to setup:

5. Enter "1". The following prompt appears:

   Will this system be the primary/active node in a new HA cluster? (y/n)

6. Enter yes. The following prompt appears:

   Is there a cross-over cable between HA nodes? (y/n)

7. Enter yes if there is a physical crossover cable connecting the HA nodes; the link-speed prompt shown in step 10 then appears, so continue with step 11. Otherwise, enter no. When you enter no, the following prompt appears:

   Is there a dedicated/private network link between the HA nodes? (y/n)

8. A dedicated private network link is required. If you enter no, the script exits. Enter yes, and the following prompt appears:

   Have you already configured an AP to act as a quorum device? (y/n)

9. You must have already configured an Admin Portal to act as a quorum witness. If you enter no, the script exits. Enter yes, and the following prompt appears:

   Please enter the IP for the quorum device:

10. Enter the IP address of the Admin Portal that you configured as the quorum witness and press Enter. The following prompt appears:

    Choose the closest value for the speed of the dedicated between HA nodes.
    1) 10Gbps
    2) 1 Gbps
    What is the physical link speed between HA nodes?

11. Select the response that most closely matches the speed of the private link between the HA nodes.

12. If you are using a crossover cable, skip to step 14. If you are not using a crossover cable, the following prompt appears:

    Would you like to use a Virtual IP (VIP)? (y/n)

13. Enter yes if you want to use a VIP; otherwise, enter no.

14. The following confirmation appears:

    Review the following architecture selections to ensure they are correct
    Architecture: HA
    Current node hostname: db1
    Crossover Cable: No
    Dedicated link speed: <the value of the speed you chose>
    Quorum Node IP: <IP address of your quorum witness>
    Virtual IP (VIP) used: Yes
    Is this architecture correct? (y/n)

15. Verify that your architecture is correct. If it is not, enter no to reenter the data; otherwise, enter yes. The following prompt appears:

    Primary node information:
    Please the IP used for HEARTBEAT traffic for this server:
    1)10.255.255.1
    ...
    Number:

16. Select the IP. The following prompt appears:

    Secondary node information:
    What is the hostname of the Secondary HA server:

17. Enter the hostname of the secondary server and press Enter. The following prompt appears:

    Please enter the IP used for HEARTBEAT traffic for the Secondary HA server that corresponds to 10.255.255.1

18. Enter the corresponding IP and press Enter. The following prompt appears:

    Please enter the PRIMARY IP address for the Secondary HA server:

19. Enter the primary IP address of the secondary High Availability server and press Enter.

20. If you are using a VIP, the following prompts appear:

    Virtual IP address information:
    Please enter the Virtual IP Address:
    Please enter the CIDR for the Virtual IP without the / (example: 24):

21. Enter the VIP address and CIDR mask.

22. The following confirmation appears:

    You have selected the following settings, please confirm if they are correct:
    Architecture: HA
    This Node: PRIMARY (Node 1)
    Node 1 Hostname: db1
    Node 1 DRBD/Heartbeat IP: 10.255.255.1
    Node 1 Primary IP: 10.64.166.251
    Node 2 Hostname: db2
    Node 2 DRBD/Heartbeat IP: 10.255.255.2
    Node 2 Primary IP: 10.64.166.252
    Virtual IP: 10.64.167.0/23
    DRBD Disk: /dev/mapper/em7vg-db
    DRBD Proxy: No
    Is this information correct? (y/n)

23. Review your information and ensure it is correct. If it is correct, enter yes.

24. After you confirm, the system configures itself, and you can expect output similar to the following:

    Setting up the environment...
    MariaDB running, sending shutdown
    Pausing Skylar One services
    Adjusting /dev/mapper/em7vg-db by extending to 39464 extents to accommodate filesystem and metadata
    Updating firewalld configuration, please be patient...
    Setting up DRBD...
    Setting up and starting Corosync...
    Setting up and starting Pacemaker...
    Waiting on cluster services to come up...
    Waiting on cluster services to come up...
    Waiting on cluster services to come up...
    Cluster services detected as active
    Configuring silo.conf for clustering
    Unpausing Skylar One
    Setup is complete
    Current cluster status
    Stack: corosync
    Current DC: db1 (version 1.1.19.linbit-8+20181129.el7.2-c3c624ea3d) - partition with quorum
    Last updated: <date and time you last updated>
    Last change: <date and time you last changed> by hacluster via crmd on db1
    2 nodes configured
    5 resources configured
    Online: [ db1 ]
    OFFLINE: [ db2 ]
    Active resources:
    Resource Group: g_em7
    p_fs_drbd1 (ocf::heartbeat:Filesystem): Started db1
    mysql (ocf::sciencelogic:mysql-systemd): Started db1
    virtual_ip (ocf::heartbeat:IPaddr2): Started db1
    Master/Slave Set: ms_drbd_r0 [p_drbd_r0]
    Masters: [ db1 ]
    Current DRBD status
    r0 role:Primary
    disk:UpToDate
    peer connection:Connecting
    silo-cluster-install has exited

25. The messages above are examples of a successful setup. The important messages that indicate a successful setup are:

    Unpausing Skylar One
    Setup is complete
    Current cluster status

    These messages indicate that the system has been configured and the required clustering services have started successfully. You can review the output of the cluster status to see if all services have started as expected.

26. If your setup did not complete successfully with the cluster services in a started state, review the log to determine the cause of the failure. After you correct the issues found in the log, you can run the setup again.
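To re-check the cluster later, you can capture the same status with `crm_mon -1`, which prints the status once and exits. The sketch below assumes resource lines end with `Started <node>` and that online nodes appear as `Online: [ ... ]`, as in the output above (formats vary between Pacemaker versions); `resources_started_on` and `online_nodes` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Hypothetical helpers that read crm_mon output on stdin.

# Exit status 0 if every resource line "(ocf::...)" ends with
# "Started NODE" and at least one such line exists.
resources_started_on() {
  awk -v node="$1" '
    /\(ocf::/ {
      total++
      if ($(NF - 1) == "Started" && $NF == node) ok++
    }
    END { exit !(total > 0 && ok == total) }
  '
}

# Print each node listed between the brackets of an "Online: [ ... ]" line.
online_nodes() {
  awk '/Online: \[/ {
    inside = 0
    for (i = 1; i <= NF; i++) {
      if ($i == "]") inside = 0
      if (inside) print $i
      if ($i == "[") inside = 1
    }
  }'
}
```

For example, `crm_mon -1 | resources_started_on db1` succeeds when every resource runs on db1, and `crm_mon -1 | online_nodes` lists the nodes that are online.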

Configuring the Secondary Appliance

To configure the Secondary appliance for High Availability, perform the following steps:

1. Log in to the console of the Secondary appliance as the em7admin user.

2. Run the following command to assume root user privileges:

   sudo -s

3. When prompted, enter the password for the em7admin user.

4. Run the following command:

   silo-cluster-install

   The following prompt appears:

   v3.7
   This wizard will assist you in setting up clustering for a ScienceLogic appliance. Please be sure to consult the ScienceLogic documentation before running this script to ensure that all prerequisites have been met.
   1) HA
   2) DR
   3) HA+DR
   4) Quit
   Please select the architecture you'd like to setup:

5. Enter "1". The following prompt appears:

   Will this system be the primary/active node in a new HA cluster? (y/n)

6. Enter no. The following prompt appears:

   Has the primary/active system already been configured and is it running? (y/n)

7. Enter yes if you have already configured the primary node and it is active and running. Otherwise, enter no and complete the setup on the primary node first. After you enter yes, the following prompt appears:

   Enter an IP for the primary system that is reachable by this node:

8. Enter the IP address of the primary system and press Enter. This node attempts to connect to the primary system and retrieve the cluster configuration information. If a successful connection cannot be established, you are prompted again for the IP address, along with a username and password. Use the same username and password that you use to connect to MariaDB.

9. When a successful connection is made, you are prompted to confirm that the architecture is the same as on the primary server:

   Review the following architecture selections to ensure they are correct
   Architecture: HA
   Current node hostname: db2
   Crossover Cable: No
   Dedicated link speed: 10Gbps
   Quorum Node IP: 10.64.166.250
   Virtual IP (VIP) used: Yes
   Is this architecture correct? (y/n)

10. Review the information. If it is correct, enter yes. If it is incorrect, enter no and regenerate the configuration on the primary/active system before proceeding.

11. After you enter yes, the architecture is validated for errors, and you are prompted to confirm the configuration. Your output should be similar to the following:

    You have selected the following settings, please confirm if they are correct:
    Architecture: HA
    This Node: SECONDARY (Node 2)
    Node 1 Hostname: db1
    Node 1 DRBD/Heartbeat IP: 10.255.255.1
    Node 1 Primary IP: 10.64.166.251
    Node 2 Hostname: db2
    Node 2 DRBD/Heartbeat IP: 10.255.255.2
    Node 2 Primary IP: 10.64.166.252
    Virtual IP: 10.64.167.0/23
    DRBD Disk: /dev/mapper/em7vg-db
    DRBD Proxy: No
    Is this information correct? (y/n)

12. Review the information. If it is correct, enter yes. If it is incorrect, enter no and regenerate the configuration on the primary/active system before proceeding.

13. After you enter yes, the following messages indicate that the system is being configured:

    Setting up the environment...
    MariaDB running, sending shutdown
    Pausing Skylar One services
    Adjusting /dev/mapper/em7vg-db by extending to 39464 extents to accommodate filesystem and metadata
    Updating firewalld configuration, please be patient...
    Setting up DRBD...
    Setting up and starting Corosync...
    Setting up and starting Pacemaker...
    Waiting on cluster services to come up...
    Waiting on cluster services to come up...
    Waiting on cluster services to come up...
    Cluster services detected as active
    Configuring silo.conf for clustering
    Unpausing Skylar One
    Setup is complete. Please monitor the DRBD synchronization status (drbdadm status)
    Failover cannot occur until DRBD is fully synced
    Current cluster status
    Stack: corosync
    Current DC: db1 (version 1.1.19.linbit-8+20181129.el7.2-c3c624ea3d) - partition with quorum
    Last updated: <date and time you last updated>
    Last change: <date and time you last changed> by hacluster via crmd on db1
    2 nodes configured
    5 resources configured
    Online: [ db1 db2 ]
    Active resources:
    Resource Group: g_em7
    p_fs_drbd1 (ocf::heartbeat:Filesystem): Started db1
    mysql (ocf::sciencelogic:mysql-systemd): Started db1
    virtual_ip (ocf::heartbeat:IPaddr2): Started db1
    Master/Slave Set: ms_drbd_r0 [p_drbd_r0]
    Masters: [ db1 ]
    Slaves: [ db2 ]
    Current DRBD status
    r0 role:Secondary
    disk:Inconsistent
    peer role:Primary
    replication:SyncTarget peer-disk:UpToDate done:8.91
    silo-cluster-install has exited
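Because failover cannot occur until DRBD is fully synced, it can help to summarize `drbdadm status` output. This sketch assumes the DRBD 9 status format shown in this section, and that a `connection:` field appears only while a peer is not connected, as in the Primary example; the function names are hypothetical and the 60-second polling interval is arbitrary.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for watching DRBD 9 synchronization.

# Reads `drbdadm status` output on stdin and prints one of:
#   no-output, disconnected, syncing <pct>%, not-uptodate, synced
drbd_sync_state() {
  awk '
    BEGIN { pct = "?" }
    /connection:/ && !/connection:Connected/ { disconnected = 1 }
    /replication:Sync/ {
      syncing = 1
      if (match($0, /done:[0-9.]+/)) pct = substr($0, RSTART + 5, RLENGTH - 5)
    }
    # Any local or peer disk state other than UpToDate.
    /disk:/ && !/disk:UpToDate/ { stale = 1 }
    END {
      if (NR == 0)           print "no-output"
      else if (disconnected) print "disconnected"
      else if (syncing)      print "syncing " pct "%"
      else if (stale)        print "not-uptodate"
      else                   print "synced"
    }
  '
}

# Polls until DRBD reports fully synced (runs drbdadm; call it only on a
# Database Server).
wait_for_drbd_sync() {
  local state
  while state=$(drbdadm status | drbd_sync_state) && [[ $state != synced ]]; do
    echo "DRBD state: $state"
    sleep 60
  done
}
```

For the Secondary output above, `drbd_sync_state` prints `syncing 8.91%`.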

Licensing the Secondary Appliance

Perform the following steps to license the Secondary appliance:

- You can log in to the Web Configuration Utility using any web browser supported by Skylar One. The address of the Web Configuration Utility is in the following format:

https://<ip-address-of-appliance>:7700

Enter the address of the Web Configuration Utility into the address bar of your browser, replacing "ip-address-of-appliance" with the IP address of the Secondary appliance.

- You will be prompted to enter your username and password. Log in as the "em7admin" user with the password you configured using the Setup Wizard.

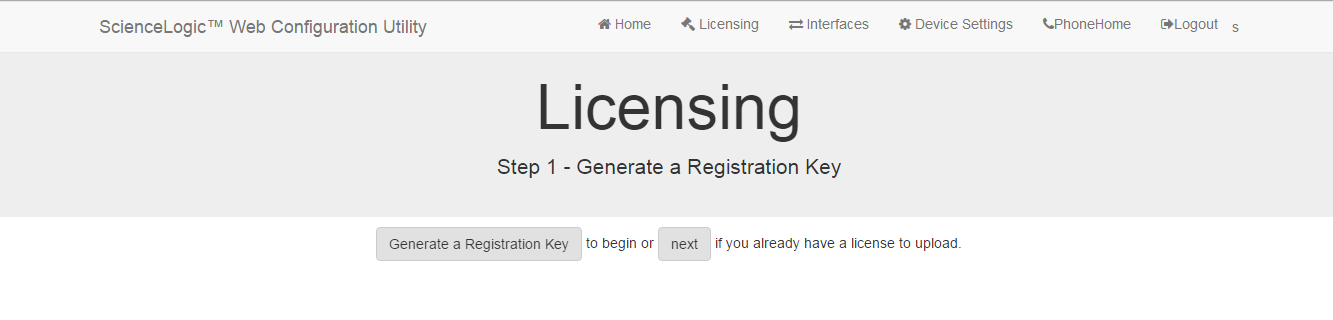

- The Configuration Utilities page appears. Click the button. The Licensing Step 1 page appears:

- Click the button.

- When prompted, save the Registration Key file to your local disk.

- Log in to the ScienceLogic Support Center and go to the ScienceLogic Product Licensing page (Support > License & Image Requests).

- Under Skylar One, click the button and follow the instructions for requesting a license key. ScienceLogic will provide you with a License Key file that corresponds to the Registration Key file.

- Return to the Web Configuration Utility:

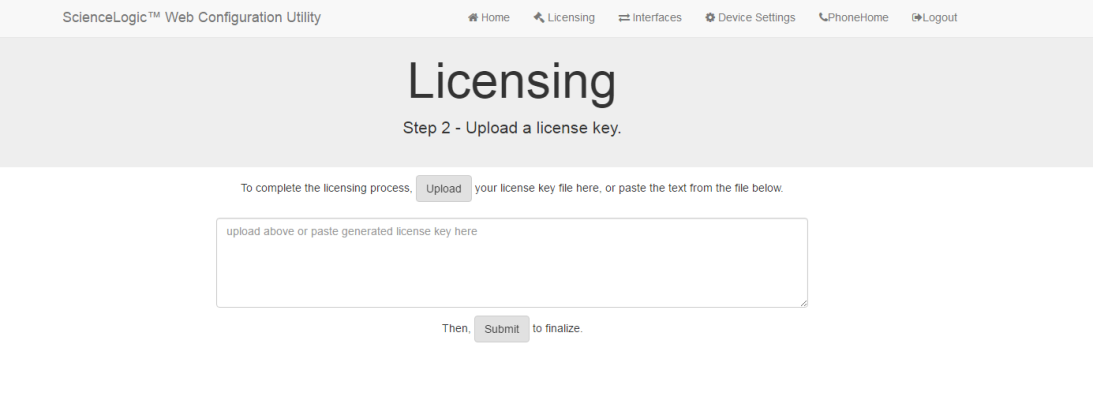

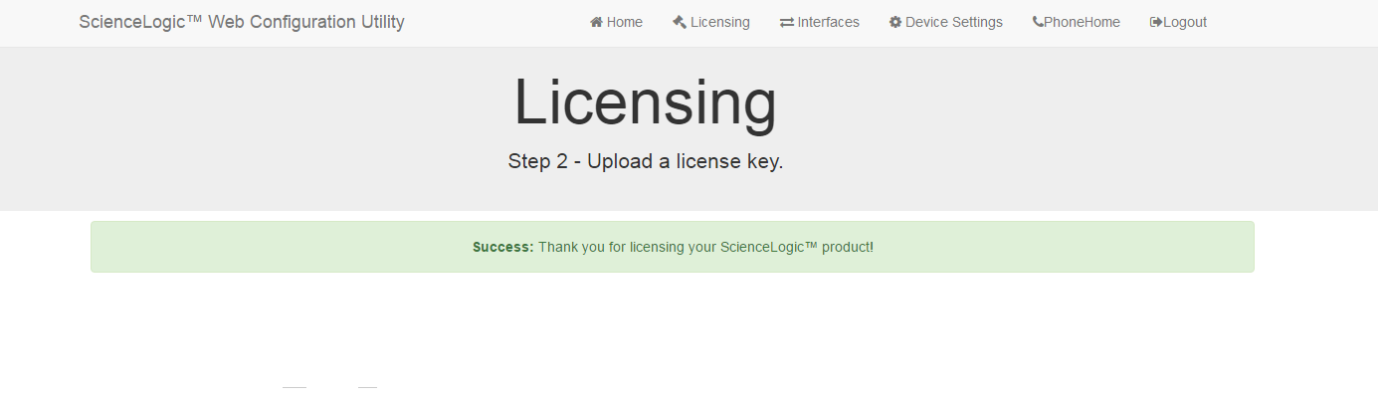

- On the Licensing Step 2 page, click the button to upload the license file. After navigating to and selecting the license file, click the button to finalize the license. The Success message appears:

Upon login, Skylar One will display a warning message if your license is 30 days or less from expiration, or if it has already expired. If you see this message, take action to update your license immediately.
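Skylar One displays this warning itself. If you also track license dates outside Skylar One, the 30-day rule can be expressed as below; this is a hypothetical sketch that assumes GNU `date` and dates in YYYY-MM-DD form.

```shell
#!/usr/bin/env bash
# Hypothetical license-date check mirroring the 30-day warning rule.

# license_status EXPIRY_DATE [TODAY]   (dates as YYYY-MM-DD, GNU date)
# Prints "expired", "warning: N days left" (N <= 30), or "ok: N days left".
license_status() {
  local expiry today days
  expiry=$(date -u -d "$1" +%s) || return 1
  today=$(date -u -d "${2:-$(date -u +%F)}" +%s) || return 1
  days=$(( (expiry - today) / 86400 ))
  if (( days < 0 )); then
    echo "expired"
  elif (( days <= 30 )); then
    echo "warning: $days days left"
  else
    echo "ok: $days days left"
  fi
}
```

For example, `license_status 2025-03-31 2025-03-01` prints `warning: 30 days left`.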

Configuring Data Collection Servers and Message Collection Servers

If you are using a distributed system, you must configure the Data Collectors and Message Collectors to use the new multi-Database Server configuration.

To configure a Data Collector or Message Collector to use the new configuration:

- You can log in to the Web Configuration Utility using any web browser supported by Skylar One. The address of the Web Configuration Utility is in the following format:

https://<ip-address-of-appliance>:7700

Enter the address of the Web Configuration Utility in the address bar of your browser, replacing "ip-address-of-appliance" with the IP address of the Data Collector or Message Collector.

- You will be prompted to enter your user name and password. Log in as the em7admin user with the password you configured using the Setup Wizard.

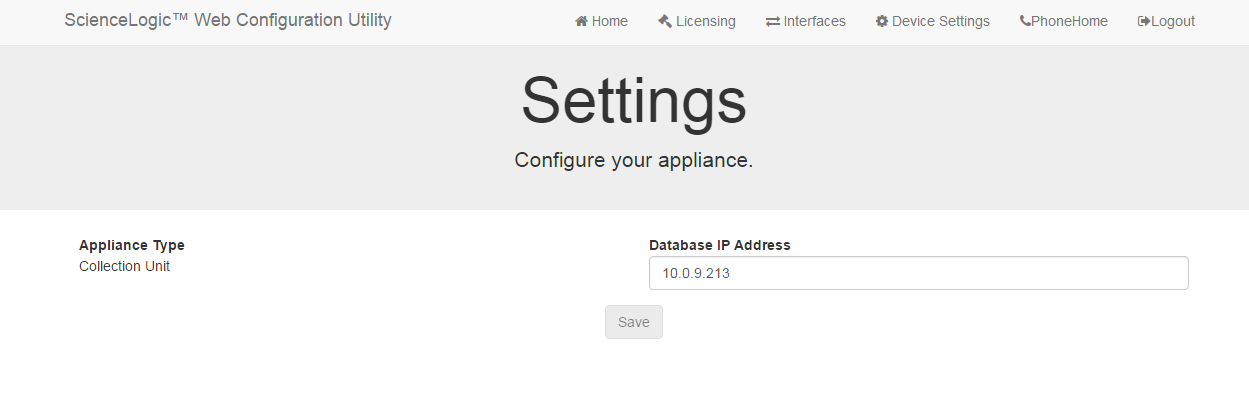

- On the Configuration Utilities page, click the button. The Settings page appears:

- On the Settings page, enter the following:

- Database IP Address. Enter the IP addresses of all the Database Servers, separated by commas.

- Click the button. You may now log out of the Web Configuration Utility for that collector.

- Perform steps 1-5 for each Data Collector and Message Collector in your system.
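A small check of the value you plan to enter in the Database IP Address field, assuming an HA pair whose two primary IP addresses should both appear. The function name is hypothetical; it strips whitespace before checking and validates only the shape of each address, not whether the Database Servers are reachable.

```shell
#!/usr/bin/env bash
# Hypothetical check for a comma-separated Database IP Address value.

# db_ip_field_ok "IP1,IP2[,...]"
db_ip_field_ok() {
  local field=$1 ip count=0
  local re='^[0-9]{1,3}(\.[0-9]{1,3}){3}$'
  local -a ips
  IFS=, read -r -a ips <<< "$field"
  for ip in "${ips[@]}"; do
    ip=${ip//[[:space:]]/}   # ignore spaces around commas for this check
    [[ $ip =~ $re ]] || { echo "not an IPv4 address: '$ip'" >&2; return 1; }
    count=$((count + 1))
  done
  # An HA cluster has two Database Servers, so expect at least two entries.
  (( count >= 2 )) || { echo "expected both Database Server IPs" >&2; return 1; }
}
```

For example, `db_ip_field_ok "10.64.166.251,10.64.166.252"` succeeds, while a single address fails.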

Starting with Skylar One version 11.3.0, Skylar One will raise a "Collector Outage" event if a Data Collector or Message Collector could not be reached from either a primary or secondary Database Server.

Failover

Failover is the process by which database services are transferred from the active database to the passive database. You can manually perform failover for testing purposes. If the active database stops responding to the secondary database over both network paths, Skylar One will automatically perform failover.

After failover completes successfully, the previously active database is now passive, and the previously passive database is now active. There is no automatic failback process; the newly active database will remain active until a failure occurs, or failover is performed manually.

Manual Failover for High Availability Clusters

To manually fail over a High Availability cluster, perform the following steps:

1. Log in to the console of the Primary appliance as the em7admin user.

2. Check the status of the appliances. To do this, enter the following at the shell prompt:

   drbdadm status

   The output for DRBD 9 on Database Servers running Oracle Linux 8 (OL8) will look like the following:

   [root@sl1-12x-ha01 em7admin]# drbdadm status
   r0 role:Primary
   disk:UpToDate
   sl1-12x-dr role:Secondary
   peer-disk:UpToDate
   sl1-12x-ha02 connection:Connecting

   The output for Database Servers running Oracle Linux 7 (OL7) will look like the following:

   1: cs:Connected ro:Primary/Secondary ds:UpToDate/UpToDate C r----
   ns:17567744 al:0 bm:1072 lo:0 pe:0 ua:0 ap:0 ep:1 wo:b oos:12521012

   On OL7, to fail over safely, the output should include "ro:Primary/Secondary ds:UpToDate/UpToDate".

   If your appliances cannot communicate, your output will include "ro:Primary/Unknown ds:UpToDate/DUnknown". Before proceeding with failover, troubleshoot and resolve the communication problem.

   If your output includes "ro:Primary/Secondary" but does not include "UpToDate/UpToDate", data is being synchronized between the two appliances. You must wait until data synchronization has finished before performing failover.

3. Run the following command, which requires root user privileges:

   sudo systemctl stop pacemaker

4. When prompted, enter the password for the em7admin user.

5. The Primary database will be shut down, and the Secondary database should automatically be promoted.

6. To verify that all services have started on the newly promoted primary database, run the following command:

   crm_mon

   If all services have started, each one should be marked as Started.

7. After all services have started on the newly promoted primary database, run the following command to complete the failover process:

   sudo systemctl start pacemaker

8. To verify that there are two nodes in the cluster, run the following command:

   crm_mon
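On OL7, the three cases described in step 2 can be distinguished by string matching. This hypothetical sketch reads the status text on standard input and assumes the OL7 output format shown above; it does not apply to the DRBD 9 format on OL8.

```shell
#!/usr/bin/env bash
# Hypothetical helper: classifies OL7 DRBD status text read from stdin.
#   safe          - "ro:Primary/Secondary ds:UpToDate/UpToDate"
#   not-connected - peer role or disk state is Unknown
#   syncing       - Primary/Secondary, but disks not both UpToDate
#   unknown       - none of the documented patterns matched
failover_readiness_ol7() {
  local status
  status=$(cat)
  if [[ $status == *"ro:Primary/Secondary ds:UpToDate/UpToDate"* ]]; then
    echo "safe"
  elif [[ $status == *"ro:Primary/Unknown"* || $status == *"DUnknown"* ]]; then
    echo "not-connected"
  elif [[ $status == *"ro:Primary/Secondary"* ]]; then
    echo "syncing"
  else
    echo "unknown"
  fi
}
```

Piping the OL7 status output from step 2 into `failover_readiness_ol7` should print `safe` before you stop Pacemaker.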

For more information about manual failover for High Availability clusters, see

Verifying that a Database Server is Active

To verify that an appliance is active after failover, ScienceLogic recommends checking the status of MariaDB, which is one of the primary processes on Database Servers.

To verify the status of MariaDB, run the following command on the newly promoted Database Server:

silo_mysql -e "select 1"

If MariaDB is running normally, you will see a '1' in the console output.
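The `select 1` check can be scripted. This sketch assumes `silo_mysql` prints either a bordered table or bare rows, as the MariaDB client does in interactive and batch modes; `select1_ok` and `db_active` are hypothetical names, and the 10-second `timeout` is an arbitrary choice.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for the MariaDB liveness check.

# Exit status 0 if stdin contains a line holding only "1", optionally
# wrapped in table borders such as "| 1 |".
select1_ok() {
  grep -Eq '^[|[:space:]]*1[|[:space:]]*$'
}

# Runs the documented check on a Database Server (requires silo_mysql).
db_active() {
  timeout 10 silo_mysql -e "select 1" 2>/dev/null | select1_ok
}
```

For example, `db_active && echo "MariaDB is answering"` on the newly promoted Database Server prints the message only when the query returns 1.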

To verify that your network is configured correctly and will allow the newly active Database Server to operate correctly, check the following system functions:

- If you use Active Directory or LDAP authentication, log in to the user interface using a user account that uses Active Directory or LDAP authentication.

- In the user interface, verify that new data is being collected.

- If your system is configured to send notification emails, confirm that emails are being received as expected. To test outbound email, create or update a ticket and ensure that the ticket watchers receive an email.

NOTE: On the Behavior Settings page (System > Settings > Behavior), if the Automatic Ticketing Emails field is set to Disabled, assignees and watchers do not receive automatic email notifications about any tickets. By default, the field is set to Enabled.

- If your system is configured to receive emails, confirm that emails are being received correctly. To test inbound email, send a test email that will trigger a "tickets from Email" policy or an "events from Email" policy.

To complete the verification process, execute the following command on the newly demoted secondary database:

sudo systemctl start pacemaker