A Collector Group is a group of SL1 Data Collectors. Data Collectors retrieve data from managed devices and applications. This collection occurs during initial discovery, during nightly updates, and in response to policies defined for each managed device. The collected data is used to trigger events, display data in the user interface, and generate graphs and reports.

You can group multiple Data Collectors into a Collector Group. Depending on the number of Data Collectors in your SL1 system, you can define one or more Collector Groups. Each Collector Group must include at least one Data Collector.

On the Collector Groups page (Manage > Collector Groups)—or the Collector Group Management page (System > Settings > Collector Groups) in the classic SL1 user interface—you can view a list of existing Collector Groups, add a Collector Group, and edit a Collector Group.

System upgrades will only consider Data Collectors and Message Collectors that are members of a Collector Group.

Grouping multiple Data Collectors allows you to:

- Create a load-balanced collection system, where you can manage more devices without loss of performance. At any given time, the Data Collector with the lightest load manages the next discovered device.

- Optionally, create a redundant, high-availability system that minimizes downtime should a failure occur. If a Data Collector fails, one or more of the other Data Collectors in the Collector Group will handle collection until the problem is solved.

NOTE: If you are using an SL1 All-In-One Appliance, most of the sections in this chapter do not apply to your system. For an All-In-One Appliance, a single, default Collector Group is included with the appliance; you cannot create any additional Collector Groups.

Use the following menu options to navigate the SL1 user interface:

- To view a pop-out list of menu options, click the menu icon.

- To view a page containing all the menu options, click the Advanced menu icon.

Installing, Configuring, and Licensing Data Collectors

Before you can create a Collector Group, you must install and license at least one Data Collector. For details on installation and licensing of a Data Collector,

After you have successfully installed, configured, and licensed a Data Collector, the platform automatically adds information about the Data Collector to the Database Server.

Technical Information About Data Collectors

You might find the following technical information about Data Collectors helpful when creating Collector Groups.

Duplicate IP Addresses

A single Collector Group cannot include multiple devices that use the same Admin Primary IP Address (this is the IP address the platform uses to communicate with a device). If a single Collector Group includes multiple devices that use the same Primary IP Address or use the same Secondary IP Address, the platform will generate an event. Best practice is to ensure that within a single Collector Group, all IP addresses on all devices are unique.

- During initial discovery, if a device is discovered with the same Admin Primary IP Address as a previously discovered device in the Collector Group, the later discovered device will appear in the discovery log, but will not be modeled in the platform. That is, the device will not be assigned a device ID and will not be created in the platform. The platform will generate an event specifying that a duplicate Admin Primary IP was discovered within the Collector Group.

- If you try to assign a device to a Collector Group, and the device's Admin Primary IP Address already exists in the Collector Group, the platform will display an error message, and the device will not be aligned with the Collector Group.
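Before you discover or move devices, it can help to check an inventory export for duplicate addresses. The following is a minimal sketch, not an SL1 API: the `inventory` list, its dictionary keys, and the function name are hypothetical and stand in for whatever device export you have available.

```python
from collections import defaultdict


def find_duplicate_admin_ips(devices):
    """Return {(collector_group, ip): [device names]} for every Admin Primary IP
    that is used by more than one device in the same Collector Group.

    `devices` is a hypothetical inventory: an iterable of dicts with the keys
    "name", "collector_group", and "admin_primary_ip".
    """
    seen = defaultdict(list)
    for device in devices:
        key = (device["collector_group"], device["admin_primary_ip"])
        seen[key].append(device["name"])
    # Keep only the addresses that appear more than once within one group.
    return {key: names for key, names in seen.items() if len(names) > 1}


# Hypothetical sample data: the same IP is fine across groups, but not within one.
inventory = [
    {"name": "router-a", "collector_group": "CUG-East", "admin_primary_ip": "10.0.0.1"},
    {"name": "router-b", "collector_group": "CUG-East", "admin_primary_ip": "10.0.0.1"},
    {"name": "router-c", "collector_group": "CUG-West", "admin_primary_ip": "10.0.0.1"},
]
duplicates = find_duplicate_admin_ips(inventory)
```

In this sample, only the two devices in "CUG-East" are reported, because the third device uses the same address in a different Collector Group.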

Open Ports

By default, Data Collectors accept connections only to the following ports:

- TCP 22 (SSH)

- TCP 53 (DNS)

- TCP 123 (NTP)

- UDP 161 (SNMP)

- UDP 162 (Inbound SNMP Trap)

- UDP 514 (Inbound Syslog)

- TCP 7700 (Web Configuration Utility)

- TCP 7707 (one-way communication from the Database Server)

For increased security, all other ports are closed.
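When planning firewall rules or custom integrations, you might want to compare the ports you need against this default list. The sketch below simply encodes the list above as a lookup table; the function name and the sample requirements are illustrative assumptions, and the code does not probe a real Data Collector.

```python
# Default open ports on a Data Collector, copied from the list above.
DEFAULT_OPEN_PORTS = {
    ("tcp", 22): "SSH",
    ("tcp", 53): "DNS",
    ("tcp", 123): "NTP",
    ("udp", 161): "SNMP",
    ("udp", 162): "Inbound SNMP Trap",
    ("udp", 514): "Inbound Syslog",
    ("tcp", 7700): "Web Configuration Utility",
    ("tcp", 7707): "one-way communication from the Database Server",
}


def blocked_by_default(required):
    """Return the (protocol, port) pairs from `required` that are closed by default."""
    blocked = []
    for protocol, port in required:
        key = (protocol.lower(), port)
        if key not in DEFAULT_OPEN_PORTS:
            blocked.append(key)
    return blocked


# Hypothetical requirement list for an integration.
needs = [("TCP", 22), ("tcp", 443), ("udp", 161)]
closed = blocked_by_default(needs)
```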

Viewing the List of Collector Groups

To view the list of Collector Groups:

- Go to the Collector Groups page (Manage > Collector Groups).

- The Collector Groups page displays a list of all Collector Groups in your SL1 system. For each Collector Group, the page displays the following:

- ID. Unique numeric identifier automatically assigned by SL1 to each Collector Group.

- Name. Name of the Collector Group.

- Devices Count. Number of devices currently using the Collector Group for data collection.

- Message Collector Count. Number of Message Collector(s) (if any) associated with the Collector Group.

- Data Collector Count. Number of Data Collectors in the Collector Group.

If you do not see one of these columns on the Collector Groups page, click the Select Columns icon to add or remove columns. You can also drag columns to different locations on the page or click on a column heading to sort the list of Collector Groups by that column's values. SL1 retains any changes you make to the columns that appear on the Collector Groups page and will automatically recall those changes the next time you visit the page.

You can filter the items on this inventory page by typing filter text or selecting filter options in one or more of the filters found above the columns on the page. For more information, see

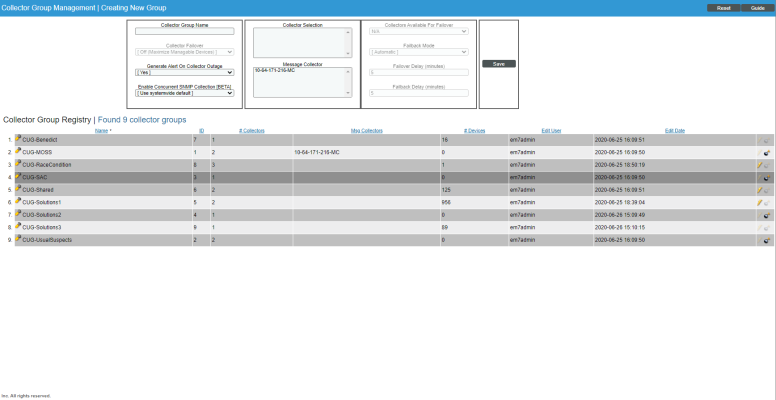

Viewing the List of Collector Groups in the Classic SL1 User Interface

To view the list of Collector Groups:

- Go to the Collector Group Management page (System > Settings > Collector Groups).

- The Collector Group Registry pane displays a list of all Collector Groups in your SL1 system. For each Collector Group, the Collector Group Management page displays the following:

- Name. Name of the Collector Group.

- ID. Unique numeric identifier automatically assigned by SL1 to each Collector Group.

- # Collectors. Number of Data Collectors in the Collector Group.

- Msg Collector. Name of the Message Collector(s) (if any) associated with the Collector Group.

- # Devices. Number of devices currently using the Collector Group for data collection.

- Edit User. User who created or last edited the Collector Group.

- Edit Date. Date and time the Collector Group was created or last edited.

Creating a Collector Group

You can group multiple Data Collectors into a Collector Group. Depending on the number of Data Collectors in your SL1 system, you can define one or more Collector Groups. Each Collector Group must include at least one Data Collector.

Pre-Deployment Questions for a Collector Group

Consider the following questions before creating a new Collector Group. Your responses to these questions will help you determine how to create and name your new Collector Group:

- Will your Collector Group span regionally close data centers and be configured for maximum resilience?

- Will your users be required to know your Collector Group naming scheme, or will you provide a general Collector Group for them to use as a default (and use specialized Collector Groups for distinct use cases only)?

- Will your Collector Group be structured for minimum latency to the monitored endpoints?

- Consider the following questions about the resilience of your deployment:

- What happens to the ability to monitor if a data center hosting an entire Collector Group goes offline?

- Is the deployment resilient and will it perform well?

- What is your failure mode? 100% > 0% or 100% > 50% > 0%?

Capacity Planning for a Collector Group

In addition to deciding on your resiliency strategy, look at your failure mode and determine if you are allocating sufficient capacity to achieve a 100% > 50% capacity degradation on a data center failure before failing completely at 0%.

Consider the number of devices in your Collector Group and the number of Data Collectors in your Collector Group to determine if you have overloaded Data Collectors or underpowered Data Collectors.
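The failure-mode question can be made concrete with simple arithmetic. The sketch below is an illustration under stated assumptions: it treats every Data Collector as equal, and the per-collector device capacity is a hypothetical planning figure that you supply, not a value published by SL1.

```python
def capacity_after_failure(collectors_by_site, failed_site):
    """Fraction of the group's Data Collectors still running if `failed_site` goes offline.

    `collectors_by_site` maps a data center name to its number of Data Collectors.
    """
    total = sum(collectors_by_site.values())
    if total == 0:
        raise ValueError("Collector Group has no Data Collectors")
    surviving = total - collectors_by_site.get(failed_site, 0)
    return surviving / total


def survivors_can_carry_load(device_count, devices_per_collector, surviving_collectors):
    """True if the surviving collectors can hold every device in the group.

    `devices_per_collector` is a hypothetical planning number.
    """
    return device_count <= devices_per_collector * surviving_collectors


# Two data centers with two Data Collectors each: losing one site leaves 50%.
remaining = capacity_after_failure({"dc1": 2, "dc2": 2}, "dc1")
```

A group hosted entirely in one data center drops straight from 100% to 0%, while the two-site layout above degrades to 50% first, provided the surviving Data Collectors have enough headroom for all devices.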

Defining a Collector Group

To define a new Collector Group:

- Go to the Collector Groups page (Manage > Collector Groups).

- Click the button. The Add Collector Group modal appears.

- On the Add Collector Group modal, complete the following fields:

- Collector Group Name. Type a name for the Collector Group.

- Generate Alert on Collector Outage. Toggle this option on to specify that the platform should generate an event if a Data Collector has an outage, or toggle it off if the platform should not generate an event if a Data Collector has an outage.

- Click .

- To assign devices to the Collector Group, see the section on aligning single devices with a Collector Group and the section on aligning a device group with a Collector Group.

If you need to specify additional Collector Group settings, you can alternatively define a Collector Group in the classic SL1 user interface using the Collector Group Management page (System > Settings > Collector Groups).

Defining a Collector Group in the Classic SL1 User Interface

To define a new Collector Group in the classic SL1 user interface:

- Go to the Collector Group Management page (System > Settings > Collector Groups).

- In the Collector Group Management page, click the button to clear the values from the fields in the top pane.

- Go to the top pane and enter values in the following fields:

- Collector Group Name. Name of the Collector Group.

- Collector Failover. Specifies whether you want to maximize the number of devices to be managed or whether you want to maximize reliability. Your choices are:

- Off (Maximize Manageable Devices). The Collector Group will be load-balanced only. At any given time, the Data Collector with the lightest load handles the next discovered device. If a Data Collector fails, no data will be collected from the devices aligned with the failed Data Collector until the failure is fixed.

- On (Maximize Reliability). The Collector Group will be load-balanced and configured as a high-availability system that minimizes downtime. If one or more Data Collectors should fail, the tasks from the failed Data Collector will be distributed among the other Data Collectors in the Collector Group.

- Generate Alert on Collector Outage. Specifies whether or not the platform should generate an event if a Data Collector has an outage.

- Enable Concurrent SNMP Collection. Specifies whether you want to enable Concurrent SNMP Collection. Concurrent SNMP Collection uses asynchronous I/O for massive concurrency with lower system resource requirements. This means that Data Collectors can collect more data using fewer system resources. Concurrent SNMP Collection also prevents missed polls and data gaps because collection will execute more quickly. For the selected Collector Group, this field overrides the value in the Behavior Settings page (System > Settings > Behavior). Your choices are:

- Use systemwide default. The Collector Group will use the global settings for Concurrent SNMP Collection configured in the Behavior Settings page (System > Settings > Behavior).

- No. Concurrent SNMP Collection is disabled on this Collector Group regardless of the global setting on the Behavior Settings page.

- Yes. Concurrent SNMP Collection is enabled on this Collector Group regardless of the global setting on the Behavior Settings page.

- Enable Concurrent PowerShell Collection. Specifies whether you want to enable Concurrent PowerShell Collection for this collector group. If you make no selection, the default behavior is to "Use systemwide default", which uses the global setting specified on the Behavior Settings page (System > Settings > Behavior). Your choices are:

- Use systemwide default. The Collector Group will use the global setting for Concurrent PowerShell Collection as it is configured on the Behavior Settings page (System > Settings > Behavior).

- No. Concurrent PowerShell Collection is disabled on this Collector Group regardless of the global setting on the Behavior Settings page.

- Yes. Concurrent PowerShell Collection is enabled on this Collector Group regardless of the global setting on the Behavior Settings page.

- Enable Concurrent Network Interface Collection. Specifies whether you want to enable or disable Concurrent Network Interface Collection for this collector group. If you make no selection, the default behavior is to "Use systemwide default", which uses the global setting specified on the Behavior Settings page (System > Settings > Behavior). Your choices are:

- Use systemwide default. The Collector Group will use the global setting for Concurrent Network Interface Collection as it is configured in the Behavior Settings page (System > Settings > Behavior).

- No. Concurrent Network Interface Collection is disabled on this Collector Group regardless of the global setting on the Behavior Settings page.

- Yes. Concurrent Network Interface Collection is enabled on this Collector Group regardless of the global setting on the Behavior Settings page.

- Collector Selection. Displays a list of available Data Collectors.

- To assign an available Data Collector server to the Collector Group, simply highlight it. You can assign one or more Data Collectors to a Collector Group.

- To assign multiple Data Collectors to the Collector Group, hold down the <Ctrl> key and click multiple Data Collectors.

- Message Collector. Displays a list of available Message Collectors.

- To assign an available Message Collector to the Collector Group, simply highlight it. You can assign one or more Message Collectors to a Collector Group.

- To assign multiple Message Collectors to the Collector Group, hold down the <Ctrl> key and click multiple Message Collectors.

- Note that a single Message Collector can be used by multiple Collector Groups.

NOTE: When you align a single Message Collector with multiple Collector Groups, the single Message Collector might then be aligned with two devices (each in a separate Collector Group) that use the same primary IP address or the same secondary IP address. If this happens, SL1 will generate an event.

- Collectors Available for Failover. Applies only if you selected "On (Maximize Reliability)" in the Collector Failover field. Specifies the minimum number of Data Collectors that must be available (i.e. with a status of "Available [0]") before a Data Collector failover may occur.

- For collector groups with only two Data Collectors, this field will contain the value "1 collector".

- For collector groups with more than two Data Collectors, the field will contain values from a minimum of one half of the total number of Data Collectors up to a maximum of one less than the total number of Data Collectors.

- For example, for a collector group with eight Data Collectors, the possible values in this field would be 4, 5, 6, and 7.

- SL1 will never automatically increase the maximum number of Data Collectors that can fail in a Collector Group. For example, suppose you have a collector group with three Data Collectors, and the Collectors Available For Failover field is set to "2". If you add a fourth Data Collector to the collector group, SL1 will automatically set the Collectors Available For Failover field to "3" so that the maximum number of Data Collectors that can fail remains one. However, you can override this automatic setting by manually changing the value in the Collectors Available For Failover field.

If you set this to half of your available Data Collectors and a 50% Data Collector outage occurs and the remaining Data Collectors are down by one, no rebalance will occur. If you specify one-third of the total number of Data Collectors, then a rebalance will be attempted until your overall capacity falls below one-third of your Data Collectors, thereby maximizing your resiliency but minimizing the opportunity for your system to enter an unproductive rebalancing loop.

If the number of available Data Collectors is less than the value in the Collectors Available For Failover field, SL1 will not fail over within the Collector Group. SL1 will not collect any data from the devices aligned with the failed Data Collector(s) until the failure is fixed on enough Data Collector(s) to equal the value in the Collectors Available For Failover field. SL1 will generate a critical event.

- Failback Mode. Applies only if you selected On (Maximize Reliability) in the Collector Failover field. Specifies how you want collection to behave when the outage is fixed. You can specify one of the following:

- Automatic. After the failed Data Collector is restored, SL1 will automatically redistribute data-collection tasks among the Collector Group, including the previously failed Data Collector.

- Manual. After the failed Data Collector is restored, you must manually prompt SL1 to redistribute data-collection tasks by clicking the lightning bolt icon for the Collector Group.

- Failover Delay (minutes). Applies only if you selected On (Maximize Reliability) in the Collector Failover field. Specifies the number of minutes SL1 should wait after the outage of a Data Collector before redistributing the data-collection tasks among the other Data Collectors in the group. During this time, data will not be collected from the devices aligned with the failed Data Collector(s). The default minimum value for this field is 5 minutes.

- Failback Delay (minutes). Applies only if you selected On (Maximize Reliability) in the Collector Failover field and Automatic in the Failback Mode field. Specifies the number of minutes SL1 should wait after the failed Data Collector is restored before redistributing data-collection tasks among the Collector Group, including the previously failed Data Collector. The default minimum value for this field is 5 minutes.

- Click the button to save the new Collector Group.

- To assign devices to the Collector Group, see the section on aligning single devices with a Collector Group in the Classic SL1 User Interface and the section on aligning a device group with a Collector Group.
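The rules for the Collectors Available for Failover field described in this procedure can be sketched as follows. This is an illustration of the documented behavior, not SL1 code: the function names are hypothetical, and rounding one half up for an odd number of Data Collectors is an assumption inferred from the three-collector example.

```python
import math


def allowed_failover_values(total_collectors):
    """Possible values of Collectors Available for Failover for a group of this size."""
    if total_collectors < 2:
        return []  # failover needs at least two Data Collectors
    if total_collectors == 2:
        return [1]
    # Assumption: "one half" is rounded up when the total is odd.
    minimum = math.ceil(total_collectors / 2)
    return list(range(minimum, total_collectors))  # maximum is total - 1


def failover_permitted(available_collectors, collectors_available_for_failover):
    """Failover happens only if enough Data Collectors are still available."""
    return available_collectors >= collectors_available_for_failover


def threshold_after_adding(total_before, threshold_before, added=1):
    """Keep the maximum number of collectors that may fail constant when the group grows."""
    max_failures = total_before - threshold_before
    return (total_before + added) - max_failures


eight_collector_values = allowed_failover_values(8)
```

For a group of eight Data Collectors this yields 4, 5, 6, and 7, and growing a three-collector group with a threshold of 2 to four collectors raises the threshold to 3, matching the examples in the field description.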

Editing a Collector Group

To edit a Collector Group:

- Go to the Collector Groups page (Manage > Collector Groups).

- Click the Actions icon of the Collector Group you want to edit and then select Edit. The Edit Collector Group modal appears.

- The fields in the Edit Collector Group modal are populated with values from the selected Collector Group. You can edit one or more of the fields. For a description of each field, see the section on Defining a Collector Group.

- Click to save any changes to the Collector Group.

Editing a Collector Group in the Classic SL1 User Interface

From the Collector Group Management page, you can edit an existing Collector Group. You can add or remove Data Collectors and change the configuration from load-balanced to failover (high availability).

To edit a Collector Group in the classic SL1 user interface:

- Go to the Collector Group Management page (System > Settings > Collector Groups).

- In the Collector Group Management page, go to the Collector Group Registry pane at the bottom of the page.

- Find the Collector Group you want to edit. Click its wrench icon.

- The fields in the top pane are populated with values from the selected Collector Group. You can edit one or more of the fields. For a description of each field, see the section on Defining a Collector Group in the Classic SL1 User Interface.

- Click the button to save any changes to the Collector Group.

Collector Groups and Load Balancing

To perform initial discovery, SL1 uses a single, selected Data Collector from the Collector Group. This allows you to troubleshoot discovery if there are any problems.

After each discovered device is modeled (that is, after SL1 assigns a device ID and creates the device in the database), SL1 distributes devices among the Data Collectors in the Collector Group. The newest device is assigned to the Data Collector currently managing the lightest load.

This process is known as Collector load balancing, and it ensures that the work performed by the Dynamic Applications aligned to the devices is evenly distributed across the Data Collectors in the Collector Group.

SL1 performs Collector load balancing in the following circumstances:

- A new Data Collector is added to a Collector Group

- New devices are discovered

- Failover or failback occurs within a Collector Group (if failover is enabled)

- A user clicks the lightning bolt icon for a Collector Group to manually force redistribution

Devices in DCM or DCM-R trees will be loaded on the Data Collector currently assigned to the DCM or DCM-R tree rather than being distributed across the Collector Group. DCM or DCM-R trees will be rebalanced as an aggregate when rebalancing occurs to an available Data Collector with sufficient capacity to sustain the load.

The lightning bolt icon appears only for Collector Groups that contain more than one Data Collector. For Collector Groups with only one Data Collector, this icon is grayed out. This icon does not appear for All-In-One Appliances.

When all of the devices in a Collector Group are redistributed, SL1 will assign the devices to Data Collectors so that all Data Collectors in the collector group will spend approximately the same amount of time collecting data from devices.

Collector load balancing uses two metrics:

- Device Rating. A device's rating is the total elapsed time consumed by either 1) all of the Dynamic Applications aligned to the device, or 2) collecting metrics from the device's interfaces, whichever is greater. A Collector's load is the sum of the ratings of the devices assigned to the Collector. The balancer tries to evenly divide the work performed by Collectors by assigning devices to Collectors using the device ratings and Collector loads.

- Collector Load. The sum of the device ratings for all of the devices assigned to a collector.

SL1 performs the following steps during Collector load balancing:

- Searches for all devices that are not yet assigned to a Collector Group.

- Determines the load on each Data Collector by calculating the device rating for each device on a Data Collector and then summing the device ratings.

- Determines the number of new devices (less than one day old) and old devices on each Data Collector.

- On each Data Collector, calculates the average device rating for old devices (sum of the device ratings for all old devices divided by the number of old devices). If there are no old devices, sets the average device rating to "1" (one).

- On each Data Collector, assigns the average device rating to all new devices (devices less than one day old).

- Assigns each unassigned device (either devices that are not yet assigned or devices on a failed Data Collector) to the Data Collector with the lightest load. Adds each newly assigned device rating to the total load for the Data Collector.
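The steps above can be sketched in a few lines of Python. This is a simplified model, not the SL1 balancer: the data structures and function names are hypothetical, and the choice to give an unrated device the average rating of its target Data Collector is an assumption made for illustration.

```python
def collector_loads(collectors):
    """Compute the load and the average old-device rating for each Data Collector.

    `collectors` maps a collector name to a list of device dicts with the keys
    "rating" (elapsed collection time) and "is_new" (less than one day old).
    """
    loads, averages = {}, {}
    for name, devices in collectors.items():
        old = [d["rating"] for d in devices if not d["is_new"]]
        # With no old devices, the average rating is set to 1.
        average = sum(old) / len(old) if old else 1.0
        new_count = sum(1 for d in devices if d["is_new"])
        # New devices are counted at the average rating of the old devices.
        loads[name] = sum(old) + average * new_count
        averages[name] = average
    return loads, averages


def assign_unassigned(collectors, unassigned):
    """Place each unassigned device on the collector with the lightest load."""
    loads, averages = collector_loads(collectors)
    placement = {}
    for device in unassigned:
        target = min(loads, key=loads.get)
        rating = device.get("rating")
        if rating is None:  # assumption: unrated devices get the target's average
            rating = averages[target]
        loads[target] += rating
        placement[device["name"]] = target
    return placement, loads


group = {
    "dc-1": [{"rating": 4.0, "is_new": False}, {"rating": 0.0, "is_new": True}],
    "dc-2": [{"rating": 3.0, "is_new": False}],
}
waiting = [{"name": "a", "rating": 2.0}, {"name": "b", "rating": None}]
placement, loads = assign_unassigned(group, waiting)
```

In this sample, "dc-1" starts with a load of 8 (one old device rated 4 plus one new device counted at the average of 4) and "dc-2" starts at 3, so both waiting devices land on "dc-2" and the two loads end up equal.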

Tuning Collector Groups in the silo.conf File

With the addition of execution environments to SL1, SL1 sorts data collections into a two-process-pool model.

SL1 sorts collection requests into groups by execution environment. These groups of collection requests are called "chunks". Each chunk contains a maximum of 200 collection requests, all of which use the same execution environment. SL1 sends each chunk to a chunk worker.

The chunk worker determines the appropriate execution environment for the chunk, deploys the execution environment, and starts a pool of request workers in the execution environment.

The request workers then process the actual collection requests contained in the chunks and perform the actual data collection.
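As a rough illustration of the chunking described above, the following sketch groups collection requests by execution environment and splits each group into chunks of at most 200 requests. The request dictionaries, the "env" key, and the function name are hypothetical; the real SL1 scheduler is not shown here.

```python
from collections import defaultdict


def build_chunks(requests, chunk_size=200):
    """Group requests by execution environment, then split each group into chunks.

    Returns a list of (environment, requests) tuples. Every chunk holds requests
    for a single environment and at most `chunk_size` of them.
    """
    by_env = defaultdict(list)
    for request in requests:
        by_env[request["env"]].append(request)

    chunks = []
    for env, env_requests in by_env.items():
        for start in range(0, len(env_requests), chunk_size):
            chunks.append((env, env_requests[start:start + chunk_size]))
    return chunks


# 450 requests for one environment and 10 for another.
sample = [{"env": "env-a", "id": i} for i in range(450)]
sample += [{"env": "env-b", "id": i} for i in range(10)]
chunk_sizes = [(env, len(chunk)) for env, chunk in build_chunks(sample)]
```

The 450 requests for the first environment become three chunks (200, 200, and 50), and the 10 requests for the second environment form their own chunk, because a chunk never mixes environments.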

For more information about ScienceLogic Libraries and execution environments, see

The following settings are available in the master.system_settings_core database table for tuning globally in a stack, or in the silo.conf file for tuning locally on a single Data Collector:

| Parameter Name | Description | Runtime Default |
|---|---|---|
| dynamic_collect_num_chunk_workers | The number of chunk workers. In general, this value controls the number of PowerPacks that can be processed in parallel. | 2 |
| dynamic_collect_num_request_workers | The maximum number of request workers in each worker pool. In general, this value controls the number of collections within a PowerPack that can be processed in parallel. | "2" or the number of cores on the Data Collector, whichever is greater |
| dynamic_collect_request_chunk_size | The maximum number of collection requests in a chunk. This value controls how many collections are processed by each pool of request workers. | 200 |

The database values for these parameters are "Null" by default, which specifies that SL1 should use the runtime defaults.

The maximum total number of worker processes used during a scheduled collection is generally dynamic_collect_num_chunk_workers X dynamic_collect_num_request_workers.
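To see how the runtime defaults and the worker total interact, consider this small sketch. It models the rules stated above and does not read an actual SL1 database or silo.conf file: the `db_values` dictionary and the function names are hypothetical.

```python
import os


def effective_settings(db_values, cpu_cores=None):
    """Resolve the three tuning parameters, using runtime defaults for null values.

    `db_values` is a hypothetical dict of configured values, where a missing key
    or None stands for "Null" (use the runtime default).
    """
    cores = cpu_cores if cpu_cores is not None else (os.cpu_count() or 1)
    defaults = {
        "dynamic_collect_num_chunk_workers": 2,
        # "2" or the number of cores, whichever is greater
        "dynamic_collect_num_request_workers": max(2, cores),
        "dynamic_collect_request_chunk_size": 200,
    }
    resolved = {}
    for name, default in defaults.items():
        value = db_values.get(name)
        resolved[name] = default if value is None else value
    return resolved


def max_worker_processes(settings):
    """Chunk workers multiplied by request workers per pool."""
    return (settings["dynamic_collect_num_chunk_workers"]
            * settings["dynamic_collect_num_request_workers"])


defaults_on_8_cores = effective_settings({}, cpu_cores=8)
total_workers = max_worker_processes(defaults_on_8_cores)
```

With all values left at "Null" on an eight-core Data Collector, the model resolves to 2 chunk workers and 8 request workers, for a maximum of 16 worker processes.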

There might be circumstances where adjustment is necessary to improve the performance of collection.

Example 1: Additional Environments Required

You might need to adjust the values of the collection processes when scheduled collection requires more than two environments.

Because the default number of chunk workers is "2", SL1 can simultaneously process chunks of collection requests for a maximum of two virtual environments. If the collection requests require more than two virtual environments, you can increase parallelism by setting dynamic_collect_num_chunk_workers to match the number of environments.

If you increase dynamic_collect_num_chunk_workers, you might want to decrease dynamic_collect_num_request_workers to avoid performance problems caused by too many request workers.

If you cannot increase dynamic_collect_num_chunk_workers because doing so would result in too many request workers, you can decrease dynamic_collect_request_chunk_size to give collection requests for each environment a "fairer share" of the chunk workers.

Smaller chunk sizes require more resources to establish the virtual environments and to create more pools of request workers to process the chunks. Conversely, if you want to use fewer resources for establishing virtual environments and creating pools of request workers, and you want to use more resources for collection itself, increasing dynamic_collect_request_chunk_size allows more collection requests to be processed by each pool of request workers.

Example 2: Input/Output Bound Collections

You might need to adjust the values of the collection processes when collection requests are input/output (I/O) bound with relatively large latencies.

In this scenario, you can increase dynamic_collect_num_request_workers to improve parallelism. If you increase dynamic_collect_num_request_workers, you might want to decrease dynamic_collect_num_chunk_workers to avoid performance problems caused by too many request workers.

Increasing the number of collection processes will increase CPU and memory utilization on the Data Collector, so be careful when increasing the values dramatically.

Before adjusting dynamic_collect_num_request_workers, you need to know the following information:

- The number of CPU cores in the Data Collector

- The current CPU utilization of the Data Collector

- The current memory utilization of the Data Collector

Start by setting dynamic_collect_num_request_workers to equal the number of CPUs plus 50%. For example: with 8 cores, start by setting dynamic_collect_num_request_workers to 12. If that is insufficient, you can then try 16, 20, 24, and so forth.
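The starting value and the follow-up values in the example above can be generated with a short helper. This is a sketch only: the function name is hypothetical, and stepping by half the core count is an assumption inferred from the 12, 16, 20, 24 sequence given for 8 cores.

```python
def request_worker_candidates(cpu_cores, steps=4):
    """Return candidate values for dynamic_collect_num_request_workers.

    The first candidate is the core count plus 50%. Later candidates grow by
    half the core count each time (an assumption based on the 8-core example).
    """
    if cpu_cores < 1:
        raise ValueError("cpu_cores must be at least 1")
    start = cpu_cores + cpu_cores // 2
    increment = max(1, cpu_cores // 2)
    return [start + i * increment for i in range(steps)]


candidates = request_worker_candidates(8)
```

Try each candidate in order, and check CPU and memory utilization on the Data Collector before moving to the next one.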

If data collections are terminating early, it means that collections are not completed within the 15-minute limit. If this is the case, wait 30 minutes to see results after adjusting the collection values.

Collector Affinity

Collector Affinity specifies the Data Collectors that are allowed to run collection for Dynamic Applications aligned to component devices. You can define Collector Affinity for each Dynamic Application. Choices are:

- Default. If the Dynamic Application is auto-aligned to a component device during discovery, then the Data Collector assigned to the root device will collect data for this Dynamic Application as well. For devices that are not component devices, the Data Collector assigned to the device running the Dynamic Application will collect data for the Dynamic Application.

- Root Device Collector. The Data Collector assigned to the root device will collect data for the Dynamic Application. This guarantees that Dynamic Applications for an entire DCM tree will be collected by a single Data Collector. You might select this option if:

- The Dynamic Application has a cache dependency with one or more other Dynamic Applications.

- You are unable to collect data for devices and Dynamic Applications within the same Device Component Map on multiple Data Collectors in a collector group.

- The Dynamic Application will consume cache produced by a Dynamic Application aligned to a non-root device (for instance, a cluster device).

- Assigned Collector. The Dynamic Application will use the Data Collector assigned to the device running the Dynamic Application. This allows Dynamic Applications that are auto-aligned to component devices during discovery to run on multiple Data Collectors. This is the default setting. You might select this option if:

- The Dynamic Application has no cache dependencies with any other Dynamic Applications.

- You want the Dynamic Application to be able to make parallel data requests across multiple Data Collectors in a collector group.

- The Dynamic Application can be aligned using mechanisms other than auto-alignment during discovery (for instance, manual alignment or alignment via Device Class Templates or Run Book Actions).
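The three Collector Affinity choices amount to a small decision rule, sketched below. The enum, the function, and its parameters are hypothetical names used for illustration. Falling back to the device's own Data Collector when no root device exists is an assumption, not documented behavior.

```python
from enum import Enum


class Affinity(Enum):
    DEFAULT = "Default"
    ROOT_DEVICE_COLLECTOR = "Root Device Collector"
    ASSIGNED_COLLECTOR = "Assigned Collector"


def collector_for(affinity, device_collector, root_collector=None,
                  auto_aligned_component=False):
    """Pick the Data Collector that runs a Dynamic Application.

    device_collector: collector assigned to the device running the application.
    root_collector: collector assigned to the root device, if the device is a
        component device (None otherwise).
    auto_aligned_component: True if the application was auto-aligned to a
        component device during discovery.
    """
    if affinity is Affinity.ROOT_DEVICE_COLLECTOR:
        # Assumption: without a root device, use the device's own collector.
        return root_collector if root_collector is not None else device_collector
    if (affinity is Affinity.DEFAULT and auto_aligned_component
            and root_collector is not None):
        return root_collector
    # Assigned Collector, or Default for devices that are not component devices.
    return device_collector


choice = collector_for(Affinity.DEFAULT, "dc-2", root_collector="dc-1",
                       auto_aligned_component=True)
```

Here the component device is assigned to "dc-2", but because the Dynamic Application was auto-aligned during discovery and uses Default affinity, collection runs on the root device's Data Collector, "dc-1".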

Failover for Collector Groups for Component Devices

If you specified Default or Root Device Collector for Dynamic Applications, and the single Data Collector in the Collector Group for component devices fails, users must create a new Collector Group with a single Data Collector and manually move the devices from the failed Collector Group to the new Collector Group. For details on manually moving devices to a new Collector Group, see the section on Changing the Collector Group for One or More Devices.

Collector Groups for Merged Devices

You can merge a physical device and a component device. There are two ways to do this:

- From the Actions menu in the Device Properties page (Devices > Device Manager > wrench icon) for either the physical device or the component device, select Merge Devices to merge devices.

- From the Actions menu in the Device Manager page (Devices > Device Manager), select Merge Devices to merge devices in bulk.

You can unmerge a component device from a physical device. You can do this in two ways:

- From the Actions menu in the Device Properties page (Devices > Device Manager > wrench icon) for either the physical device or the component device, select Unmerge Devices to unmerge devices.

- From the Actions menu in the Device Manager page (Devices > Device Manager), select Unmerge Devices to unmerge devices in bulk.

When you merge a physical device and a component device, the device record for the component device is no longer displayed in the user interface; the device record for the physical device is displayed in user interface pages that previously displayed the component device. For example, the physical device is displayed instead of the component device in the Device Components page (Devices > Device Components) and the Component Map page (Device Component Map). All existing and future data for both devices will be associated with the physical device.

If you manually merge a component device with a physical device, SL1 allows data for the merged component device and data from the physical device to be collected on different Data Collectors. Data that was aligned with the component device can be collected by the Collector Group for its root device. Data aligned with the physical device can be collected by a different Collector Group.

NOTE: You can merge a component device with only one physical device.

Creating a Collector Group for Data Storage Only

From the Collector Group Management page, you can create a Virtual Collector Group that serves as a storage area for all historical data from decommissioned devices.

The Virtual Collector Group will store all existing historical data from all aligned devices, but will not perform collection on those devices. The Virtual Collector Group will not contain any Data Collectors or any Message Collectors. SL1 will stop collecting data from devices aligned with a Virtual Collector Group.

To define a Virtual Collector Group:

- Go to the Collector Group Management page (System > Settings > Collector Groups).

- In the Collector Group Management page, click the button to clear values from the fields in the top pane.

- Go to the top pane and enter a name for the virtual Collector Group in the Collector Group Name field.

- Leave all other fields set to the default values. Do not include any Data Collectors or Message Collectors in the Collector Group.

- Click the button to save the new Collector Group.

- To assign devices to the virtual Collector Group, see the section on aligning single devices with a Collector Group and the section on aligning a device group with a Collector Group.

Deleting a Collector Group

To delete a Collector Group:

- Go to the Collector Groups page (Manage > Collector Groups).

- Click the Actions icon of the Collector Group you want to delete and then select Delete.

Deleting a Collector Group in the Classic SL1 User Interface

From the Collector Group Management page, you can delete a Collector Group. When you delete a Collector Group, the Data Collectors in that group become available for use in other Collector Groups.

NOTE: Before you can delete a Collector Group, you must move all aligned devices to another Collector Group. For details on how to do this, see the section Changing the Collector Group for One or More Devices.

To delete a Collector Group:

- Go to the Collector Group Management page (System > Settings > Collector Groups).

- In the Collector Group Management page, go to the Collector Group Registry pane at the bottom of the page.

- Find the Collector Group you want to delete. Click its bomb icon.

Assigning a Collector Group for a Single Device

After you have defined a Collector Group, you can align devices with that Collector Group.

To assign a Collector Group to a device:

- From the Devices page, click the name of the device that you want to assign to a Collector Group. The Device Investigator page opens for that device.

- On the Device Investigator page, click the tab.

- Click the button. This enables you to change your device settings.

- In the Collection Poller field, select the name of the Collector Group you want to use for collection on the device.

- Click .

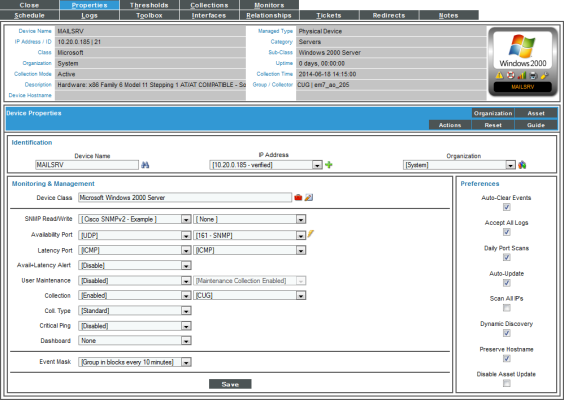

Assigning a Collector Group for a Single Device in the Classic SL1 User Interface

After you have defined a Collector Group, you can align devices with that Collector Group.

To assign a Collector Group to a device in the classic SL1 user interface:

- Go to the Device Manager page (Devices > Device Manager).

- In the Device Manager page, find the device you want to edit. Click its wrench icon. The Device Properties page appears.

- In the Device Properties page, you can select a Collector Group from the Collection fields.

- Click the button to save the change to the device.

Aligning the Collector Group in a Device Template

You can specify a Collector Group in a device template. Then, when you apply the device template to a device, either through discovery or when you apply the device template to a device group or selection of devices, the specified Collector Group is automatically associated with the device(s). Optionally, you can later edit the Collector Group for each device.

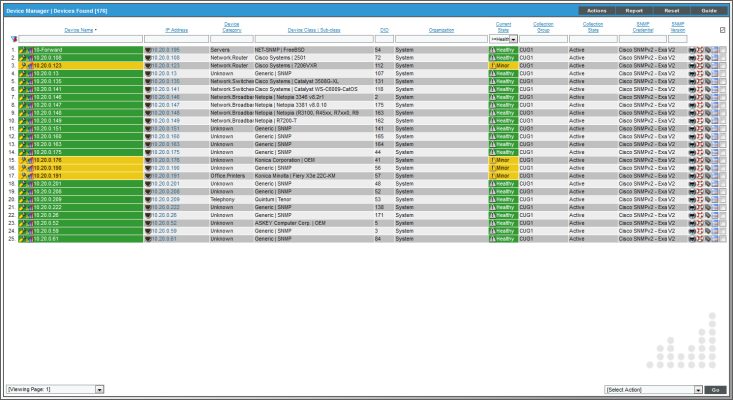

Changing the Collector Group for One or More Devices

You can change the Collector Group for multiple devices simultaneously. This is helpful if you want to reorganize devices or Collector Groups. If you want to delete a Collector Group, you must first move each aligned device to another Collector Group. In this situation, you might want to change the Collector Group for multiple devices simultaneously.

To change the Collector Group for multiple devices simultaneously:

- Go to the Device Manager page (Devices > Device Manager).

- In the Device Manager page, click on the heading for the Collection Group column to sort the list of devices by Collector Group.

- Select the checkbox for each device that you want to move to a different Collector Group.

- In the Select Action field (in the lower right), go to Change Collector Group and select a Collector Group.

- Click the button. The selected devices will now be aligned with the selected Collector Group.
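The dependency between moving devices and deleting a Collector Group can be modeled in a few lines. The following is a minimal sketch, not SL1 code: the `Inventory` class, its method names, and the device and group names are all hypothetical, and exist only to illustrate the rule that a Collector Group can be deleted only after every aligned device has been moved.

```python
from dataclasses import dataclass, field


@dataclass
class Inventory:
    """Hypothetical in-memory model: device name -> Collector Group name."""
    device_group: dict = field(default_factory=dict)
    groups: set = field(default_factory=set)

    def change_collector_group(self, devices, new_group):
        # Bulk move: align every selected device with the new Collector Group.
        if new_group not in self.groups:
            raise ValueError(f"unknown Collector Group: {new_group}")
        for device in devices:
            self.device_group[device] = new_group

    def delete_collector_group(self, group):
        # Deletion is refused while any device is still aligned with the group.
        aligned = [d for d, g in self.device_group.items() if g == group]
        if aligned:
            raise RuntimeError(f"move {len(aligned)} device(s) first")
        self.groups.discard(group)


inv = Inventory(
    device_group={"router-1": "CUG_A", "switch-1": "CUG_A", "server-1": "CUG_B"},
    groups={"CUG_A", "CUG_B"},
)

blocked = None
try:
    inv.delete_collector_group("CUG_A")
except RuntimeError as err:
    blocked = str(err)  # deletion is blocked while devices are still aligned

# Move the aligned devices in bulk, after which the deletion succeeds.
inv.change_collector_group(["router-1", "switch-1"], "CUG_B")
inv.delete_collector_group("CUG_A")
```

In this model the first deletion attempt fails because two devices are still aligned with the group; after the bulk move, the same call succeeds.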

Managing the Host Files for a Collector Group

The Host File Entry Manager page allows you to edit and manage host files for all of your Data Collectors from a single page in the SL1 system. When you create or edit an entry in the Host File Entry Manager page, SL1 automatically sends an update to every Data Collector in the specified Collector Group.

The Host File Entry Manager page is helpful when:

- The SL1 system does not reside in the end-customer's domain

- The SL1 system does not have line-of-sight to an end-customer's DNS service

- A customer's DNS service cannot resolve a host name for a device that the SL1 system monitors

For details, see the section on Managing Host Files.

Processes for Collector Groups

For troubleshooting and debugging purposes, you might find it helpful to understand the ScienceLogic processes that affect a Collector Group.

NOTE: You can view the list of all processes and details for each process in the Process Manager page (System > Settings > Admin Processes).

- The Enterprise Database: Collector Task Manager process (em7_ctaskman) distributes devices between Data Collectors in a Collector Group to load-balance the collection tasks. The process runs every 60 seconds and also checks the license on each Data Collector. The Enterprise Database: Collector Task Manager process (em7_ctaskman.py) redistributes devices between Data Collectors when:

- A Collector Group is created.

- A new Data Collector is added to a Collector Group.

- Failover or failback occurs within a Collector Group.

- A user clicks on the lightning bolt icon for a Collector Group, to manually force redistribution.

- The Enterprise Database: Collector Data Pull processes retrieve information from each Data Collector in a Collector Group. Each process pulls data from the in_storage tables on each Data Collector. The retrieved information is stored in the Database Server.

- Enterprise Database: Collector Data Pull, High F (em7_hfpulld). Retrieves data from each Data Collector every 15 seconds (configurable).

- Enterprise Database: Collector Data Pull, Low F (em7_lfpulld). Retrieves data from each Data Collector every five minutes.

- Enterprise Database: Collector Data Pull, Medium (em7_mfpulld). Retrieves data from each Data Collector every 60 seconds.

- The Enterprise Database: Collector Config Push process (config_push.py) updates each Data Collector with information on system configuration, configuration of Dynamic Applications, and any new or changed policies. This process runs once every 60 seconds and checks for differences between the configuration tables on the Database Server and the configuration tables on each Data Collector. The list of tables to be synchronized is stored in master.definitions_collector_config_tables on the Database Server.

- Asynchronous Processes (for example, discovery or programs run from the Device Toolbox page). Asynchronous processes need to be run immediately and cannot wait until the Enterprise Database: Collector Config Push process (config_push.py) runs and tells the Data Collector to run the asynchronous process. Therefore, SL1 uses a stored procedure and the EM7 Core: Task Manager process (em7) to trigger asynchronous processes on both the Database Server and Data Collector.

- If a user requests an asynchronous process, a stored procedure on the Database Server inserts a new row in the table master_logs.spool_process on the Database Server.

- Every three seconds, the EM7 Core: Task Manager process (proc_mgr.py) checks the table master_logs.spool_process on the Database Server for new rows.

- If the asynchronous process needs to be started on a Data Collector, a stored procedure on the Database Server inserts the same row into the table master_logs.spool_process on the Data Collector.

- Every three seconds, the EM7 Core: Task Manager process (em7) checks the table master_logs.spool_process on the Data Collector for new rows.

- If the EM7 Core: Task Manager process (em7) on the Data Collector finds a new row, the specified asynchronous process is executed on the Data Collector.
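The hand-off for asynchronous processes described above can be sketched as a small simulation. This is an illustration under stated assumptions, not ScienceLogic code: `SpoolTable` is a hypothetical in-memory stand-in for the master_logs.spool_process table, the function names and the `local_task` process name are invented for the example, and the sketch assumes that a row that does not need a Data Collector is executed on the Database Server.

```python
from collections import deque

POLL_INTERVAL_SECONDS = 3  # interval stated in the text; the sketch does not sleep


class SpoolTable:
    """Hypothetical stand-in for a master_logs.spool_process table."""

    def __init__(self):
        self._rows = deque()

    def insert(self, row):
        self._rows.append(row)

    def take_new_rows(self):
        # Return the rows added since the last poll and mark them as seen.
        rows = list(self._rows)
        self._rows.clear()
        return rows


def request_async_process(db_spool, name, run_on_collector):
    # Models the stored procedure that inserts a row on the Database Server.
    db_spool.insert({"process": name, "run_on_collector": run_on_collector})


def database_server_poll(db_spool, collector_spool, executed):
    # One polling pass of the task manager on the Database Server.
    for row in db_spool.take_new_rows():
        if row["run_on_collector"]:
            # The same row is inserted into the Data Collector's table.
            collector_spool.insert(dict(row))
        else:
            executed.append(("database_server", row["process"]))


def data_collector_poll(collector_spool, executed):
    # One polling pass of the task manager on the Data Collector.
    for row in collector_spool.take_new_rows():
        executed.append(("data_collector", row["process"]))


db_spool, collector_spool, executed = SpoolTable(), SpoolTable(), []
request_async_process(db_spool, "discovery", run_on_collector=True)
request_async_process(db_spool, "local_task", run_on_collector=False)
database_server_poll(db_spool, collector_spool, executed)
data_collector_poll(collector_spool, executed)
```

Because each side polls its own table on a short interval, a request reaches the Data Collector after at most two polling passes, without waiting for the 60-second configuration push.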

Enabling and Disabling Concurrent PowerShell for Collector Groups

To improve the process of collecting data via PowerShell, you can enable Concurrent PowerShell Collection. Concurrent PowerShell Collection allows multiple collection tasks to run at the same time with a reduced load on Data Collectors. Concurrent PowerShell Collection also prevents missed polls and data gaps because collection will execute more quickly. As a result, Data Collectors can collect more data using fewer system resources.

When you use the PowerShell Collector for Concurrent PowerShell Collection, the collection process can bypass failed or paused collections, reduce collection time, and reduce the number of early terminations (sigterms) that occur with data collection. The PowerShell Collector is an independent service running as a container on a Data Collector.

You can enable one or more Collector Groups to use concurrent PowerShell collection, and you can collect metrics for concurrent PowerShell collection.

NOTE: Concurrent PowerShell Collection is for PowerShell Performance and Performance Configuration Dynamic Application types and does not include Snippet Dynamic Applications which happen to run PowerShell commands.

For more details on concurrent PowerShell collection, see

Enabling Concurrent PowerShell on All Collector Groups

To enable concurrent PowerShell collection service for all collector groups:

- Go to the Database Tool page (System > Tools > DB Tool).

NOTE: The Database Tool page does not display for users that do not have sufficient permissions for that page.

- Enter the following in the SQL Query field:

INSERT INTO master.system_custom_config (`field`, `field_value`) VALUES ('enable_powershell_service', '1');

Disabling Concurrent PowerShell on All Collector Groups

To disable concurrent PowerShell collection service for all collector groups:

- Go to the Database Tool page (System > Tools > DB Tool).

NOTE: The Database Tool page does not display for users that do not have sufficient permissions for that page.

- Enter the following in the SQL Query field:

UPDATE master.system_custom_config SET field_value=0 where field='enable_powershell_service';
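The effect of the enable and disable statements can be tried safely outside of SL1. The sketch below uses an in-memory SQLite database as a stand-in; it is not the SL1 Database Server. The column names come from the statements above, while the column types, the omission of the `master.` schema prefix, and the `powershell_service_enabled` helper are assumptions made for this example.

```python
import sqlite3

# In-memory SQLite stand-in, NOT the real SL1 database. Column names are
# taken from the statements above; the column types are an assumption.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE system_custom_config "
    "(field TEXT, field_value TEXT, cug_filter TEXT)"
)


def powershell_service_enabled(conn):
    """Hypothetical helper: read the system-wide flag from the stand-in table."""
    row = conn.execute(
        "SELECT field_value FROM system_custom_config WHERE field = ?",
        ("enable_powershell_service",),
    ).fetchone()
    # A missing row is treated as disabled (an assumption of this sketch).
    return row is not None and str(row[0]) == "1"


before = powershell_service_enabled(conn)

# Equivalent of the INSERT statement used to enable the service.
conn.execute(
    "INSERT INTO system_custom_config (field, field_value) VALUES (?, ?)",
    ("enable_powershell_service", "1"),
)
after_enable = powershell_service_enabled(conn)

# Equivalent of the UPDATE statement used to disable the service.
conn.execute(
    "UPDATE system_custom_config SET field_value = ? WHERE field = ?",
    ("0", "enable_powershell_service"),
)
after_disable = powershell_service_enabled(conn)
```

Note that an UPDATE changes only rows that already exist, so the disable statement has an effect only if the row was previously inserted by the enable statement.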

Enabling Concurrent PowerShell on a Specific Collector Group

To enable concurrent PowerShell collection for a specific collector group:

- Go to the Database Tool page (System > Tools > DB Tool).

NOTE: The Database Tool page does not display for users that do not have sufficient permissions for that page.

- Enter the following in the SQL Query field:

INSERT INTO master.system_custom_config (`field`, `field_value`, `cug_filter`) VALUES ('enable_powershell_service_CUGx', '1', 'collector_group_ID');

where:

collector_group_ID is the collector group ID. You can find this value in the Collector Group Management page (System > Settings > Collector Groups).

Disabling Concurrent PowerShell on a Specific Collector Group

To disable concurrent PowerShell collection for a specific collector group:

- Go to the Database Tool page (System > Tools > DB Tool).

NOTE: The Database Tool page does not display for users that do not have sufficient permissions for that page.

- Enter the following in the SQL Query field:

UPDATE master.system_custom_config SET field_value=0 where field='enable_powershell_service_CUGx';

where:

collector_group_ID is the collector group ID. You can find this value in the Collector Group Management page (System > Settings > Collector Groups).

Enabling and Disabling Concurrent SNMP for Collector Groups

To increase the scale for SNMP collection, you can enable Concurrent SNMP Collection. Concurrent SNMP Collection uses the standalone container called the SL1 SNMP Collector.

The SNMP Collector is an independent service that runs as a container on a Data Collector. When you enable Concurrent SNMP Collection, each Data Collector will contain four (4) SNMP Collector containers.

On each Data Collector, SL1 will restart each of the SNMP Collector containers periodically to ensure that each container remains healthy. When one SNMP Collector container is restarted, the other three SNMP Collector containers continue to handle the workload.

With Concurrent SNMP Collection, SNMP collection tasks can run in parallel. A single failed task will not prevent other tasks from completing.

Concurrent SNMP Collection provides:

- Improved throughput for SNMP Dynamic Applications

- Reduced use of resources on each Data Collector

- More dependable collection from high-latency devices
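The idea that parallel tasks are isolated from each other's failures can be illustrated with a short sketch. This is an analogy only: SL1 runs the SNMP Collector as containers, whereas the example below uses threads in a single Python process. The `poll_device` function, the device names, and the simulated timeout are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed

WORKERS = 4  # mirrors the four SNMP Collector containers; illustrative only


def poll_device(device):
    # Hypothetical stand-in for one SNMP collection task.
    if device == "unreachable-host":
        raise TimeoutError(f"{device} did not respond")
    return f"{device}: ok"


def collect_all(devices):
    results, failures = {}, {}
    with ThreadPoolExecutor(max_workers=WORKERS) as pool:
        futures = {pool.submit(poll_device, d): d for d in devices}
        for future in as_completed(futures):
            device = futures[future]
            try:
                results[device] = future.result()
            except Exception as err:
                # A failed task is recorded; the other tasks still complete.
                failures[device] = str(err)
    return results, failures


results, failures = collect_all(["router-1", "unreachable-host", "switch-1"])
```

The failure for the unreachable device is captured separately, and the results for the other two devices are still returned.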

Enabling and Disabling Concurrent SNMP for All Collector Groups

This feature is disabled by default.

To enable Concurrent SNMP Collection in SL1:

- Go to the Behavior Settings page (System > Settings > Behavior).

- Check the Enable Concurrent SNMP Collection field.

- Click .

If you do not want all of your Data Collectors to use Concurrent SNMP Collection, you can specify which Collector Groups should use it. For details, see the section on Enabling and Disabling Concurrent SNMP for Collector Groups.

Enabling and Disabling Concurrent SNMP for Collector Groups

Depending on the needs of your SL1 environment, you can enable or prevent a Collector Group from using concurrent SNMP collection.

To enable or disable Concurrent SNMP Collection for an SL1 Collector Group:

- Go to the Collector Group Management page (System > Settings > Collector Groups).

- Click the wrench icon for the Collector Group you want to edit. The fields at the top of the page are updated with the data for that Collector Group.

- Select an option in the Enable Concurrent SNMP Collection dropdown:

- Use system-wide default. Select this option if you want this Collector Group to use or not use Concurrent SNMP Collection based on the Enable Concurrent SNMP Collection field on the Behavior Settings page. This is the default.

- Yes. Select this option to enable Concurrent SNMP Collection for this Collector Group, even if you did not enable it on the Behavior Settings page.

- No. Select this option to prevent this Collector Group from using Concurrent SNMP Collection, even if you did enable it on the Behavior Settings page.

- Update the remaining fields as needed, and then click .
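The interaction between the system-wide setting and the three dropdown options can be summarized as a small decision function. This is a sketch of the logic described above, not SL1 code; the `GroupSetting` enum and the `concurrent_snmp_enabled` function are hypothetical names.

```python
from enum import Enum


class GroupSetting(Enum):
    """The three options of the Enable Concurrent SNMP Collection dropdown."""
    USE_SYSTEM_DEFAULT = "Use system-wide default"
    YES = "Yes"
    NO = "No"


def concurrent_snmp_enabled(system_wide_enabled,
                            group_setting=GroupSetting.USE_SYSTEM_DEFAULT):
    # "Yes" and "No" override the Behavior Settings page for this group.
    if group_setting is GroupSetting.YES:
        return True
    if group_setting is GroupSetting.NO:
        return False
    # Otherwise the group follows the system-wide setting.
    return system_wide_enabled


# Feature off system-wide, but forced on for one Collector Group.
forced_on = concurrent_snmp_enabled(False, GroupSetting.YES)
# Feature on system-wide, but blocked for one Collector Group.
forced_off = concurrent_snmp_enabled(True, GroupSetting.NO)
# Group left at the default follows the system-wide setting.
inherited = concurrent_snmp_enabled(True)
```

Only a Collector Group left at the default option changes behavior when the system-wide checkbox is toggled.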