
After you create steps, you can use them with other steps in one or more PowerFlow applications.

You can also use the PowerFlow user interface to perform many of the actions in this chapter. For more information, see Managing SL1 PowerFlow Applications.

All Python step code should be Python 3.7 or later.

What is a Step?

In PowerFlow, a step is a generic Python class that performs a single action, such as gathering data about an organization. The following image shows a step from the PowerFlow user interface:

Using Steps in a PowerFlow Application

You can create new steps or use existing steps to create your own workflows, and you can re-use steps in more than one workflow. When these steps are combined as part of a PowerFlow application, they provide a workflow that satisfies a business requirement.

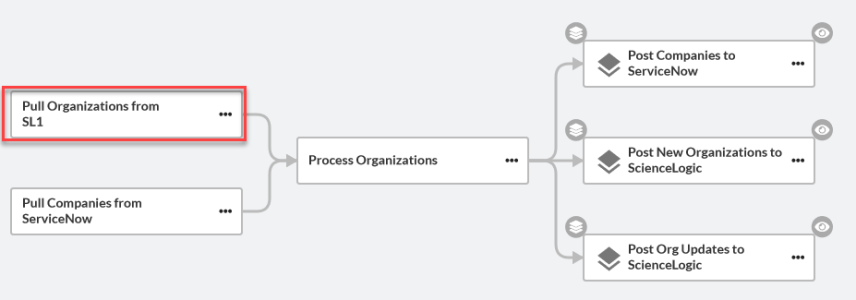

For example, the set of steps below in the "Sync Organizations from SL1 to ServiceNow Companies" application in the PowerFlow user interface gathers data about SL1 organizations and ServiceNow companies, processes that data based on the configuration settings specified for that set of steps, and posts that data to SL1 and ServiceNow to keep the organization and company data in sync in both places:

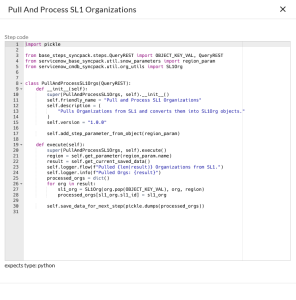

In the PowerFlow builder, you can click the ellipsis icon on a step and select View step code to view the Python code for that step.

Using Input Parameters to Configure a Step

You can configure how a step works by adjusting a set of arguments called input parameters. The parameters specify the values, variables, and configurations to use when executing the step. Parameters allow steps to accept arguments and allow steps to be re-used in multiple integrations.

For example, you can use the same step to query both the local system and another remote system; only the arguments, such as hostname, username, and password change.

To view and edit the input parameters for a step:

-

Go to the Applications page of the PowerFlow user interface and click the name of a PowerFlow application.

-

Click the button.

-

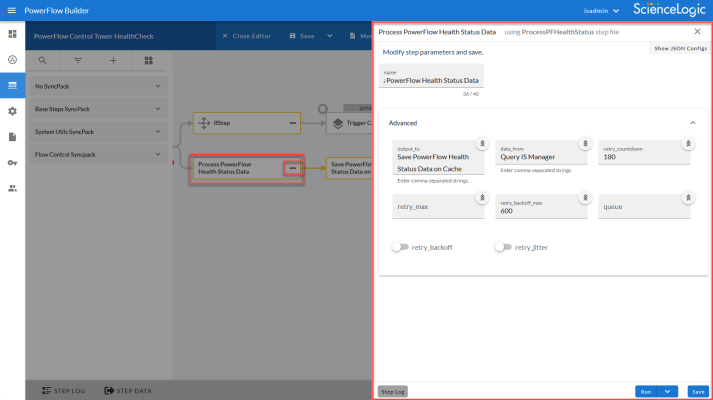

Click the ellipsis icon (

) on the step and select Configure. The Configuration pane for that step appears:

) on the step and select Configure. The Configuration pane for that step appears:If your current ScienceLogic SL1 solution subscription does not include the SL1 PowerFlow builder, contact your ScienceLogic Customer Success Manager or Customer Support to learn more.

Sharing Data Between Steps

A step can pass the data it generates during execution to a subsequent step. A step can use the data generated by another step. Also, you can run test data for that step by hovering over the button and selecting Custom Run.

PowerFlow analyzes the required parameters for each step and alerts you if any required parameters are missing before running the step.

Types of Steps

Steps are grouped into the following types:

- Standard. Standard steps do not require any previously collected data to perform. Standard steps are generally used to generate data to perform a transformation or a database insert. These steps can be run independently and concurrently.

- Aggregated. Aggregated steps require data that was generated by a previously run step. Aggregated steps are not executed by PowerFlow until all data required for the aggregation is available. These steps can be run independently and concurrently.

- Trigger. Trigger steps are used to trigger other PowerFlow applications. These steps can be configured to be blocking or not.

A variety of generic steps are available from ScienceLogic, and you can access a list of steps by sending a GET request using the API /steps endpoint.

Workflow for Creating a Step

To create a custom step without using the PowerFlow user interface:

- Download or copy the step template, called stepTemplate.

- Set up the required classes and methods in the step.

- Define logic for the step, including transferring data between steps.

- Define parameters for the step.

- Define logging for the step.

- Define exceptions for the step.

- Upload the step to PowerFlow.

- Validate and test the step.

Creating a Step from the Step Template

The easiest way to create a new step is to use the step template that is included with PowerFlow. To copy this template to your desktop:

- Using an API tool like Postman or cURL, use the API GET /steps/{step_name}:

GET <URL_for_PowerFlow>/api/v1/steps/stepTemplate

where <URL_for_PowerFlow> is the IP address or URL for PowerFlow. For example:

https://10.1.1.111/api/v1/steps/stepTemplate

- Select and copy all the text from the "data" field in the stepTemplate.

- Open a source-code editor and paste the content of the stepTemplate in the source-code editor.

- Save the new file as newfilename.py, where newfilename is the new name of the step. The file name must include the .py suffix.

The file name must be unique within your PowerFlow system and cannot contain spaces. Note that the step name will also be the name of the Python class for the step.
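The copy-and-save procedure above can also be scripted. The following is a minimal sketch, assuming the response from GET /steps/stepTemplate is a JSON object whose "data" field holds the step source as text. The function name save_step_source and the sample response are hypothetical, and the sketch leaves out the HTTP request and authentication.

```python
import json
import os
import tempfile


def save_step_source(response_text, new_step_name, target_dir):
    """Write the "data" field of a step response to <new_step_name>.py."""
    if " " in new_step_name:
        # The step file name cannot contain spaces.
        raise ValueError("step name cannot contain spaces")
    body = json.loads(response_text)
    path = os.path.join(target_dir, new_step_name + ".py")
    with open(path, "w", encoding="utf-8") as handle:
        handle.write(body["data"])
    return path


# Hypothetical response body, shaped like the "data" field described above.
sample_response = json.dumps({"data": "class stepTemplate(BaseStep):\n    pass\n"})
saved_path = save_step_source(sample_response, "MyNewStep", tempfile.mkdtemp())
```

After saving, you still need to rename the class inside the file so that it matches the new file name.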

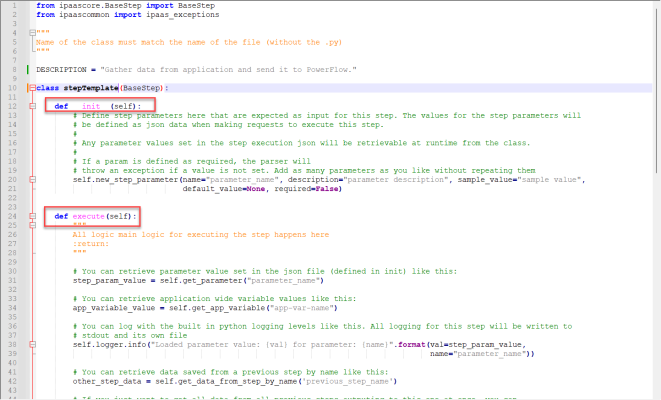

Example Code: stepTemplate

You can also copy the following code from the stepTemplate.py file and paste it into a source-code editor to create your own template file:

from ipaascore.BaseStep import BaseStep
from ipaascommon import ipaas_exceptions

"""
Name of the class must match the name of the file (without the .py)
"""

DESCRIPTION = "A brief description of what this step will do. This will be visible from the GUI"


class stepTemplate(BaseStep):
    def __init__(self):
        # Define step parameters here that are expected as input for this step. The values for the step parameters will
        # be defined as json data when making requests to execute this step.
        #
        # Any parameter values set in the step execution json will be retrievable at runtime from the class.
        #
        # If a param is defined as required, the parser will
        # throw an exception if a value is not set. Add as many parameters as you like without repeating them
        self.new_step_parameter(name="parameter_name", description="parameter description", sample_value="sample value",
                                default_value=None, required=False)

    def execute(self):
        """
        All main logic for executing the step happens here
        :return:
        """
        # You can retrieve parameter value set in the json file (defined in init) like this:
        step_param_value = self.get_parameter("parameter_name")

        # You can retrieve application wide variable values like this:
        app_variable_value = self.get_app_variable("app-var-name")

        # You can log with the built in python logging levels like this. All logging for this step will be written to
        # stdout and its own file
        self.logger.info("Loaded parameter value: {val} for parameter: {name}".format(val=step_param_value,
                                                                                      name="parameter_name"))

        # You can retrieve data saved from a previous step by name like this:
        other_step_data = self.get_data_from_step_by_name('previous_step_name')

        # If you just want to get all data from all previous steps outputting to this one at once, you can
        # use the data merge helper:
        all_step_data_combined = self.join_previous_step_data()

        # Perform whatever logic you want here

        # In the event of a failure, you can raise any exception to exit. We recommend using the StepFailedException but
        # this is not required
        # raise ipaas_exceptions.StepFailedException("Error occurred")

        # You can save data generated from this step which will automatically be available in subsequent steps like so:
        save_data = {'key': 'value'}
        self.save_data_for_next_step(save_data)

Including the Subclass and Required Methods

To execute successfully on PowerFlow, your step must be a subclass of the ipaascore.BaseStep class. The ipaascore.BaseStep class is a Python class that is included with PowerFlow, and it contains multiple predefined functions that you can use when you are writing or editing a step.

Your new step must include the init method and the execute method. These methods are explained in the Required Methods section, below.

Subclass

The stepTemplate.py file is already configured to include the new step as a subclass of the ipaascore.BaseStep class. To update your step:

- Use a source-code editor to open the new .py file for editing.

- Notice that the file includes these lines of text:

from ipaascore.BaseStep import BaseStep

from ipaascommon import ipaas_exceptions

Do not remove or alter these lines of text.

- Search for the following:

class stepTemplate(BaseStep):

- Replace stepTemplate with the new name of the file (without the .py suffix).

- Save and close the file.
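Because the class name must match the file name, it can help to check the rename before uploading. The following is a minimal sketch using only the Python standard library. The helper class_matches_filename is hypothetical and is not part of PowerFlow; it parses the source without importing it, so ipaascore does not need to be installed.

```python
import ast
import os


def class_matches_filename(source, file_path):
    """Return True if the source defines a class named after the file (without .py)."""
    expected = os.path.splitext(os.path.basename(file_path))[0]
    tree = ast.parse(source)
    class_names = [node.name for node in tree.body if isinstance(node, ast.ClassDef)]
    return expected in class_names


source = "class MyNewStep(BaseStep):\n    pass\n"
print(class_matches_filename(source, "/tmp/MyNewStep.py"))     # True
print(class_matches_filename(source, "/tmp/stepTemplate.py"))  # False
```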

Required Methods

To execute successfully on PowerFlow, your step must contain at least these two methods:

- init method. This method lets you define initialization options and parameters for the step.

- execute method. This method includes the logic for the step and performs the action. After PowerFlow evaluates all parameters and initialization settings and aligns the step with a worker process, PowerFlow examines the execute method.

Without these methods, PowerFlow will consider your step to be "incomplete" and will not execute the step.

The stepTemplate.py file includes these two methods and the syntax of some of the sub-methods you can use within the main methods:

Example Code: Subclass and Required Methods

For example, the "GetREST" step from the "Base Steps" Synchronization PowerPack contains the following code, which defines both the init and execute methods:

from ipaascommon.ipaas_exceptions import StepFailedException
from ipaascommon.ipaas_utils import str_to_bool
from base_steps_syncpack.steps.HTTPBaseStep import HTTPBaseStep
from base_steps_syncpack.util.request_params import (
    chunk_size_param,
    chunk_name_for_pos_in_url_param,
    chunk_name_for_max_in_url_param,
    chunk_name_for_total_param,
    chunk_name_for_returned_param,
    missing_return_total_param,
)


class GetREST(HTTPBaseStep):
    def __init__(self):
        super(GetREST, self).__init__()
        self.friendly_name = "GetREST"
        self.description = "Step facilitates REST GET interactions and will return the returned data dictionary and specified headers as data to the next step"
        self.version = "1.0.0"
        self.add_step_parameter_from_object(chunk_size_param)
        self.add_step_parameter_from_object(chunk_name_for_pos_in_url_param)
        self.add_step_parameter_from_object(chunk_name_for_max_in_url_param)
        self.add_step_parameter_from_object(chunk_name_for_total_param)
        self.add_step_parameter_from_object(chunk_name_for_returned_param)
        self.add_step_parameter_from_object(missing_return_total_param)
        self.enable_paging = True
        self.method = "GET"

    def execute(self):
        if not self.chunk_size:
            self.chunk_size = int(self.get_parameter(chunk_size_param.name))
        self.chunk_name_for_pos_in_url = self.get_parameter(
            chunk_name_for_pos_in_url_param.name
        )
        self.chunk_name_for_max_in_url = self.get_parameter(
            chunk_name_for_max_in_url_param.name
        )
        self.chunk_name_for_total = self.get_parameter(chunk_name_for_total_param.name)
        self.chunk_name_for_returned = self.get_parameter(
            chunk_name_for_returned_param.name
        )
        if self.missing_return_total is None:
            self.missing_return_total = str_to_bool(
                self.get_parameter(missing_return_total_param.name)
            )
        try:
            self.execute_request()
            response = self.get_current_saved_data()
            self.save_data_for_next_step(response)
        except StepFailedException as err:
            if len(err.args) > 1:
                self.save_data_for_next_step(err.args[1])
            raise StepFailedException(err.args[0])

Defining the Logic for the Step

Each step requires the init method and the execute method. Within those methods, you can specify parameters and logic for the step.

The init Method

Defining the Step Name, Description, and Version

From the init method, you can define the friendly name, the step description, and the step version. The following code examples contain the definitions for each value:

self.friendly_name = "friendly name of the step. This name appears in the user interface"

self.description = "Description of the step"

self.version = "version number"

In the "GetREST" step, the friendly name, description, and version number are defined like this:

def __init__(self):
    self.friendly_name = "GetREST"
    self.description = "Step facilitates REST interactions and will return the returned data dictionary and specified headers as data to the next step"
    self.version = "1.0.0"

Defining Parameters for the Step

From the init method, you also define the input parameters for the step. The input parameters specify the values, variables, and configurations to use when executing the step. Parameters allow steps to accept arguments and allow steps to be re-used in multiple integrations. PowerFlow will examine the parameters for the step and enforce the parameters when the step is run.

Parameters display as editable fields on the Configuration pane for that step in the PowerFlow user interface.

For example, if you specify a parameter as required, and the user does not specify the required parameter when calling the step, PowerFlow will display an error message and will not execute the step.

To define a new parameter, use the self.new_step_parameter function:

self.new_step_parameter( name=<parameter_name>, description="<description>", sample_value="<sample_value>", default_value=<default_value>, required=<True/False>, param_type=parameter_types.<Number/String/Boolean>Parameter(), )

where:

- name. The name of the parameter. This value will be used to create a name:value tuple in the PowerFlow application file (in JSON).

- description. A description of the step parameter.

- sample_value. A sample value of the required data type or schema.

- default_value. If no value is specified for this parameter, use the default value. Can be any Python data structure. To prevent a default value, specify None.

- required. Specifies whether this parameter is required by the step. The possible values are True or False.

- param_type. Specifies the type of parameter. Options include Number, String, Boolean. This setting is optional.

The following is an example from the "Cache Save" step from the "Base Steps" SyncPack:

self.new_step_parameter( name=SAVE_KEY, description="The key for which to save this data with", sample_value="keyA", default_value=None, required=True, param_type=parameter_types.StringParameterShort(), )

The execute Method

From the execute method you can:

- Use Python logic and functions

- Retrieve the value of a parameter with self.get_parameter

- Retrieve data from a previous step in the application

- Save data for use by the next step in the application

- Define logging for the step

- Define exceptions for the step

For details on all the functions you can use in the execute method, see the chapter on the ipaascore.BaseStep class.

You can also define additional methods in the step. For examples of this and of other logic in a step, see any of the steps in the "Base Steps" SyncPack provided with PowerFlow.

Transferring Data Between Steps

An essential part of integrations is passing data between tasks. PowerFlow includes native support for saving and transferring Python objects between steps. The ipaascore.BaseStep class includes multiple functions for transferring data between steps.

Saving Data for the Next Step

The save_data_for_next_step function saves an object or other type of data and makes the data available to another step. The object to be saved must be serializable with pickle. For more information about pickle, see https://docs.python.org/3/library/pickle.html.

For example:

save_data_for_next_step(<data_to_save>)

where: <data_to_save> is a variable that contains the data.

NOTE: The <data_to_save> object must be of a data type that can be pickled by Python: None, True and False, integers, long integers, floating point numbers, complex numbers, normal strings, unicode strings, tuples, lists, set, and dictionaries.

The following is an example of the save_data_for_next_step function:

save_data = {'key': 'value'}

self.save_data_for_next_step(save_data)
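If you are unsure whether an object can be saved, you can try pickling it locally first. The following is a minimal sketch using the standard pickle module. The helper can_be_saved is hypothetical and is not part of the BaseStep class.

```python
import pickle
import threading


def can_be_saved(data):
    """Return True if pickle can serialize the object."""
    try:
        pickle.dumps(data)
    except (pickle.PicklingError, TypeError, AttributeError):
        return False
    return True


print(can_be_saved({"key": "value", "ids": [1, 2, 3]}))  # True
print(can_be_saved(threading.Lock()))                    # False
```

Objects that wrap operating system resources, such as locks, open sockets, or database connections, generally cannot be pickled, so convert them to plain data before saving.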

The PowerFlow application must then specify that the data from the current step should be passed to one or more subsequent steps, using the output_to parameter. For more information, see Transferring Data Between Steps.

Retrieving Data from a Previous Step

The ipaascore.BaseStep class includes multiple functions that retrieve data from a previous step. Each function is described below.

To retrieve data from a previous step:

- That previous step must save the data with the save_data_for_next_step function.

- The PowerFlow application must specify that the data from the previous step should be passed to the current step using the output_to parameter.

get_data_from_step_by_name

The get_data_from_step_by_name function retrieves data saved by a previous step.

Although the get_data_from_step_by_name function is simple to use, it does not allow you to write a generic, reusable step, because the step name will be hard-coded in the function. The join_previous_step_data or get_data_from_step_by_order functions allow you to create a more generic, reusable step.

For example:

get_data_from_step_by_name('<step_name>')

where <step_name> is the name of a previous step in the PowerFlow application. Use the system name of the step, not the "friendly name" with spaces that appears in the PowerFlow user interface.

The following is an example of the get_data_from_step_by_name function:

em7_data = self.get_data_from_step_by_name('FetchDevicesFromEM7')

snow_data = self.get_data_from_step_by_name('FetchDevicesFromSnow')

get_data_from_step_by_order

The get_data_from_step_by_order function retrieves data from a step based on the position of the step in the application.

For example:

get_data_from_step_by_order(<position>)

where: <position> is the position of the step (the order that the step was run) in the PowerFlow application. Position starts at 0 (zero).

For example:

- Suppose your application has four steps: stepA, stepB, stepC, and stepD

- Suppose stepA was run first (position 0) and includes the parameter output_to:[stepD]

- Suppose stepB was run second (position 1) and includes the parameter output_to:[stepD]

- Suppose stepC was run third (position 2) and includes the parameter output_to:[stepD]

- Suppose stepD was run fourth

If the current step is stepD, and stepD needs the data from stepC, you could use the following:

data_from_stepC = self.get_data_from_step_by_order(2)
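The position lookup in this example can be modeled in plain Python. The following is an illustrative sketch only, not the BaseStep implementation: the run_order list, the saved_data dictionary, and the data_by_order helper are all hypothetical stand-ins for what PowerFlow tracks internally.

```python
# Order in which the steps ran; the list index is the position (starting at 0).
run_order = ["stepA", "stepB", "stepC", "stepD"]

# Hypothetical data saved by each step that outputs to stepD.
saved_data = {
    "stepA": {"source": "A"},
    "stepB": {"source": "B"},
    "stepC": {"source": "C"},
}


def data_by_order(position):
    """Look up saved data by the position at which the step ran."""
    return saved_data[run_order[position]]


data_from_stepC = data_by_order(2)
print(data_from_stepC)  # {'source': 'C'}
```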

join_previous_step_data

The join_previous_step_data function is the easiest and most generic way of retrieving data from one or more previous steps in the application.

If you are expecting similar data from multiple steps, or expecting data from only a single step, the join_previous_step_data function is the best choice.

The join_previous_step_data function gathers all data from all steps that included the save_data_for_next_step function and also include the output_to parameter in the application. By default, this function returns the joined set of all data that is passed to the current step. You can also specify a list of previous steps from which to join data.

The retrieved data must be of the same type. The data is then combined into a list in a dictionary. If the data types are not the same, then the function will raise an exception.

For example:

join_previous_step_data(<step_name>)

where <step_name> is an optional argument that specifies the steps. For example, if you wanted to join only the data from stepA and stepD, you could specify the following:

self.join_previous_step_data(["stepA", "stepD"])

The following is an example of the join_previous_step_data function in the "SaveToCache" step (included in each PowerFlow system):

def execute(self):
    data_from = self.get_parameter(DATA_FROM_PARAM, {})
    if data_from:
        data_to_cache = self.join_previous_step_data(data_from)
    else:
        data_to_cache = self.join_previous_step_data()
    ...
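The same-type rule described above can be illustrated with a small stand-alone function. This is a sketch under stated assumptions, not the PowerFlow implementation: the helper join_same_type is hypothetical, and the merge strategy (updating dictionaries, concatenating lists) is an assumption chosen for illustration. Only the rule that mixed types raise an exception comes from the description above.

```python
def join_same_type(step_outputs):
    """Join outputs from several steps, refusing mixed types."""
    if not step_outputs:
        return None
    first_type = type(step_outputs[0])
    if any(type(item) is not first_type for item in step_outputs):
        raise TypeError("all step outputs must be of the same type")
    if first_type is dict:
        joined = {}
        for item in step_outputs:
            joined.update(item)  # assumption: later steps win on key clashes
        return joined
    if first_type is list:
        joined = []
        for item in step_outputs:
            joined.extend(item)
        return joined
    # Assumption: any other type is simply collected into a list.
    return list(step_outputs)


print(join_same_type([[1], [2, 3]]))  # [1, 2, 3]
```

If the steps feeding a generic step produce different types, normalize the data in each upstream step before saving it.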

Step Parameters

Steps accept arguments, called input parameters. Users can configure these parameters to specify the values, variables, and configurations to use when executing.

Base Parameters Available in All Steps

The BaseStep class has a few base parameters that are automatically inherited by all steps and cannot be overwritten. You do not need to define these parameters before using them in steps:

- name. The application-unique name for this step. This parameter can be used by other steps to refer to a step.

- file. The name of the file that will be executed by the step. For example, you could write step logic in a single file, but use that step logic with different applications and use different names for the step in each application.

- output_to. A list indicating that the data retrieved from this step should be output to another step. Setting this parameter links the steps, and the subsequent step will be able to retrieve data from the current step. The format is:

"output_to":["stepA", "stepB"]

Defining a Parameter

From the init method, you can define one or more input parameters for the step. The PowerFlow system will examine the parameters and enforce them when the step is run.

For example, if you specify a parameter as required, and the user does not specify the required parameter when calling the step, the PowerFlow system will display an error message and will not execute the step.

To define a parameter, use the new_step_parameter function.

self.new_step_parameter( name=<parameter_name>, description="<description>", sample_value="<sample_value>", default_value=<default_value>, required=<True/False>, param_type=parameter_types.<Number/String/Boolean>Parameter(), )

where:

- name. The name of the parameter. This value will be used to create a name:value tuple in the PowerFlow application file (in JSON).

- description. A description of the step parameter.

- sample_value. A sample value of the required data type or schema.

- default_value. If no value is specified for this parameter, use the default value. Can be any Python data structure. To prevent a default value, specify None.

- required. Specifies whether this parameter is required by the step. The possible values are True or False.

- param_type. Specifies the type of parameter. Options include Number, String, Boolean. This setting is optional.

The following is an example from the GetREST step:

self.new_step_parameter(name=PREFIX_URL, description="used with relative_url to create the full URL.", sample_value="http://10.2.11.253", default_value=None, required=True)
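The enforcement of required parameters can be modeled as follows. This is a minimal sketch, not PowerFlow code: the helper check_required and the "timeout" parameter are hypothetical, and the dictionaries simply mirror the keyword arguments of new_step_parameter.

```python
def check_required(parameter_defs, supplied):
    """Return resolved values, or raise if a required parameter has no value."""
    resolved = {}
    for definition in parameter_defs:
        name = definition["name"]
        # Fall back to the default when the caller supplies no value.
        value = supplied.get(name, definition.get("default_value"))
        if value is None and definition.get("required", False):
            raise ValueError("missing required parameter: " + name)
        resolved[name] = value
    return resolved


parameter_defs = [
    {"name": "prefix_url", "default_value": None, "required": True},
    {"name": "timeout", "default_value": 30, "required": False},  # hypothetical
]

resolved = check_required(parameter_defs, {"prefix_url": "http://10.2.11.253"})
```

Calling check_required(parameter_defs, {}) raises ValueError, which corresponds to PowerFlow refusing to execute the step.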

Retrieving Parameter Values

To retrieve the latest value of a parameter, use the get_parameter function.

get_parameter("<param_name>", <lookup_data>=None)

where:

- <param_name>. The name of the parameter that you want to retrieve the value for.

- <lookup_data>. An optional dictionary that can provide a reference for additional variable substitutions.

For example, suppose we defined this parameter in the step named "GETgoogle":

self.new_step_parameter(name="prefix_url", description="used with relative_url to create the full URL.", sample_value="http://10.2.11.253", default_value=None, required=True)

Suppose in the PowerFlow application that calls "GETgoogle", we specified:

"steps": [

{

"file": "GetREST",

"name": "GETgoogle",

"output_to": ["next_step"],

"prefix_url": "http://google.com"

}

],

Suppose we use the get_parameter function in the step "GETgoogle" to retrieve the value of the "prefix_url" parameter:

build_url_1 = self.get_parameter("prefix_url")

The value of build_url_1 would be "http://google.com".
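To make the lookup concrete, the following sketch reads the same value directly from the application JSON. It is a simplified model only: the helper lookup_parameter is hypothetical, and it ignores defaults defined in the step and variable substitution, both of which get_parameter handles for you.

```python
import json

application = json.loads("""
{
  "steps": [
    {
      "file": "GetREST",
      "name": "GETgoogle",
      "output_to": ["next_step"],
      "prefix_url": "http://google.com"
    }
  ]
}
""")


def lookup_parameter(app, step_name, param_name, default=None):
    """Find a step entry by its "name" and return one of its parameter values."""
    for step in app["steps"]:
        if step["name"] == step_name:
            return step.get(param_name, default)
    raise KeyError(step_name)


build_url_1 = lookup_parameter(application, "GETgoogle", "prefix_url")
print(build_url_1)  # http://google.com
```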

Variable Substitution in Parameters

PowerFlow lets you define variables so that input parameters can be populated dynamically.

To include a variable in a parameter, use the following syntax:

${<exampleVariable>}

PowerFlow includes the following types of variables that you can use in parameters:

- ${object_from_previous_step}. PowerFlow will search the data from the previous steps for object_from_previous_step. If found, PowerFlow substitutes the value of the object for the variable.

- ${config.<exampleVariable>}. Configuration variables are defined in a stand-alone file that lives on PowerFlow and can be accessed by all applications and their steps. Including the config. prefix with a variable tells PowerFlow to look in a configuration file to resolve the variable. If you want to re-use the same settings (like hostname and credentials) between applications, define configuration variables.

- ${appvar.<exampleVariable>}. Application variables are defined in the PowerFlow application. These variables can be accessed only by steps in the application. Including the appvar. prefix with a variable tells PowerFlow to look in the application to resolve the variable.

- ${step_func.<exampleFunction> <args>}. The variable value will be the output from the user-defined function, specified in exampleFunction, with the arguments specified in args. The exampleFunction must exist in the current step. Additional parameters can be specified as args with a space delimiter. You can also specify additional variable substitution values as the arguments. This allows you to dynamically set the value of a variable using a proprietary function, with dynamically generated arguments. For example:

"param": "${step_func.add_numbers 1 2}"

will call a function (defined in the current step) called "add_numbers" and pass it the arguments "1" and "2". The value of "param" will be "3".

For details on defining configuration variables and application variables, see Defining Variables for an Application.
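The substitution of configuration and application variables can be pictured with a short regular-expression sketch. This is an illustration under stated assumptions, not the PowerFlow resolver: the substitute function, the variable names, and the sample values are hypothetical, and the sketch handles only the config. and appvar. prefixes.

```python
import re

# Matches ${config.name} and ${appvar.name}.
VARIABLE = re.compile(r"\$\{(config|appvar)\.([A-Za-z0-9_]+)\}")


def substitute(value, config, appvars):
    """Replace ${config.x} and ${appvar.x} references in a parameter string."""
    sources = {"config": config, "appvar": appvars}

    def replace(match):
        prefix, name = match.group(1), match.group(2)
        return str(sources[prefix][name])

    return VARIABLE.sub(replace, value)


config = {"sl1_host": "10.2.11.253"}  # hypothetical configuration variables
appvars = {"region": "emea"}          # hypothetical application variables
result = substitute("https://${config.sl1_host}/api?region=${appvar.region}", config, appvars)
print(result)  # https://10.2.11.253/api?region=emea
```

In this sketch an undefined variable raises KeyError; how PowerFlow itself reports an unresolved variable is not covered here.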

Defining Logging for the Step

PowerFlow includes a logger for steps. The BaseStep class initializes the logger, so it is ready for use by each step.

To define logging in a step, use the following syntax:

self.logger.<logging_level>("<log_message>")

where:

- <logging_level> is one of the following Python logging levels:

- critical

- error

- info

- warning

- <log_message> is the message that will appear in the step log.

For example:

self.logger.info("informational message")
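To try the logging calls outside PowerFlow, you can use a standard Python logger as a stand-in for self.logger. The following sketch assumes only the standard logging module. The logger name, the message text, and the output format are hypothetical, and in a real step BaseStep provides and configures the logger for you.

```python
import io
import logging

# Stand-in for self.logger, writing to an in-memory stream.
stream = io.StringIO()
logger = logging.getLogger("example_step")
logger.setLevel(logging.INFO)
logger.propagate = False
handler = logging.StreamHandler(stream)
handler.setFormatter(logging.Formatter("%(levelname)s %(message)s"))
logger.addHandler(handler)

logger.info("informational message")
logger.warning("something looks unusual")
logger.error("request failed")
logger.critical("cannot continue")

log_output = stream.getvalue()
print(log_output)
```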

Raising Exceptions

PowerFlow natively handles exceptions raised from custom steps. You can include a user-defined exception or any standard Python exception.

If an exception is raised at runtime, the step will immediately be marked as a failure and be discarded.

To view the exception and the complete stack trace, use the steps in Viewing Logs.

Uploading Your Step

When you create a new step or edit an existing step, you must upload the step to the PowerFlow system.

There are two ways to upload a step to the PowerFlow system: with the iscli command-line tool or with the API.

Uploading a Step with iscli

The PowerFlow system includes a command-line tool called iscli. When you install PowerFlow, iscli is automatically installed.

To upload a step to PowerFlow using iscli:

- Either go to the console of PowerFlow or use SSH to access the server.

- Log in as isadmin with the appropriate password.

- Enter the following at the command line:

iscli -u -s -f <path_and_name_of_step_file>.py -H <hostname_or_IP_address_of_powerflow> -P <port_number_of_http_on_powerflow> -U <user_name> -p password

where:

- <path_and_name_of_step_file> is the full pathname for the step.

- <hostname_or_IP_address_of_powerflow> is the hostname or IP address of PowerFlow.

- <port_number_of_http_on_powerflow> is the port number to access PowerFlow. The default value is 443.

- <user_name> is the user name you use to log in to PowerFlow.

- password is the password you use to log in to PowerFlow.

Uploading a Step with the API

PowerFlow includes an API that you can use to upload steps.

To upload a step with the API POST /steps:

POST /steps

{

"name": "name_of_step",

"data": "string"

}

where data is all the information included in the step.
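The request body can be built from the step file with a few lines of Python. This is a minimal sketch, assuming the "data" field carries the step source as a single string. The helper build_upload_body and the sample source are hypothetical, and sending the request (URL, credentials, TLS settings) is left out.

```python
import json


def build_upload_body(step_name, step_source):
    """Build the JSON body for POST /steps from a step name and its source text."""
    return json.dumps({"name": step_name, "data": step_source})


step_source = "class MyNewStep(BaseStep):\n    pass\n"
body = build_upload_body("MyNewStep", step_source)
```

Using json.dumps rather than string concatenation ensures that newlines and quotation marks in the step source are escaped correctly.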

Validating Your Step

After uploading a step, you can use the API POST /steps/run to run the step individually without running an application. This allows you to validate that the step works as designed.

To run a step from the IS API:

POST /steps/run

{

"name": "name_of_step",

all other data from the .json file for the step

}

After the POST request is made, PowerFlow will dispatch the step to a remote worker process for execution. By default, the POST request will wait five seconds for the step to complete. To override the default wait period, you can specify the wait time as a parameter in the POST request. For example, to specify that the wait time should be 10 seconds:

POST /steps/run?wait=10

{

"name": "example_step"

}

If the step completes within the wait time, PowerFlow returns a 200 return code, logs, output, and the result of the step.

If the step does not complete within the wait time, PowerFlow returns a task ID. You can use this task ID to view the logs for the step.

The API returns one of the following codes:

- 200. Step executed and completed within the timeout period.

- 202. Step executed but did not complete within the timeout period, or the user did not specify a wait time. The returned data includes the task ID to query for the status of the step.

- 400. Required parameter for the step is missing.

- 404. Step not found.

- 500. Internal error. Database connection might be lost.
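A client script can branch on these return codes. The following sketch simply encodes the list above as a lookup table. The names STATUS_MEANINGS, describe_run_status, and needs_polling are hypothetical.

```python
STATUS_MEANINGS = {
    200: "completed within the wait time",
    202: "accepted but not finished; query the returned task for status",
    400: "a required parameter for the step is missing",
    404: "step not found",
    500: "internal error; the database connection might be lost",
}


def describe_run_status(status_code):
    """Translate a POST /steps/run return code into a short description."""
    return STATUS_MEANINGS.get(status_code, "unexpected status code")


def needs_polling(status_code):
    """Only a 202 means the step is still running and must be checked later."""
    return status_code == 202


print(describe_run_status(202))
print(needs_polling(200))  # False
```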

Viewing Logs

After running a step, you can view the log information for a step. Log information for a step is saved for the duration of the result_expires setting in the PowerFlow system. The result_expires setting is defined in the file opt/iservices/scripts/docker-compose.yml. The default value for log expiration is 24 hours.

To view the log information for a step before running an integration, you can use the API POST /steps/run to run the step individually without running an application. You can then use the information in steps 3-6 below to view step logs.

To view the log information for a step:

- Run a PowerFlow application.

- Using an API tool like Postman or cURL, use the API GET /applications/{appName}/logs:

GET <URL_for_PowerFlow>/api/v1/applications/<application_name>/logs

- You should see something like this:

{

"app_name": "example_integration",

"app_vars": {},

"href": "/api/v1/tasks/isapp-af7d3824-c147-4d44-b72a-72d9eae2ce9f",

"id": "isapp-af7d3824-c147-4d44-b72a-72d9eae2ce9f",

"start_time": 1527429570,

"state": "SUCCESS",

"steps": [

{

"href": "/api/v1/tasks/2df5e7d5-c680-4d9d-860c-e1ceccd1b189",

"id": "2df5e7d5-c680-4d9d-860c-e1ceccd1b189",

"name": "First EM7 Query",

"state": "SUCCESS",

"traceback": null

},

{

"href": "/api/v1/tasks/49e1212b-b512-4fa7-b099-ea6b27acf128",

"id": "49e1212b-b512-4fa7-b099-ea6b27acf128",

"name": "second EM7 Query",

"state": "SUCCESS",

"traceback": null

}

],

"triggered_by": [

{

"application_id": "isapp-af7d3824-c147-4d44-b72a-72d9eae2ce9f",

"triggered_by": "USER"

}

]

}

- In the "steps" section, notice the lines that start with href and id. You can use these lines to view the logs for the application and the steps.

- To use the href value to get details about a step, use an API tool like Postman or cURL and then use the API GET /steps/{step_name}:

GET <URL_for_PowerFlow>/<href_value>

where <href_value> is the href value you can copy from the log file for the application. The href value is another version of the step name.

To view logs for subsequent runs of the application, you can include the href specified in the last_run field.

- To use the task ID value to view details about a step, use an API tool like Postman or cURL and then use the API GET /tasks/{task_ID}:

GET <URL_for_PowerFlow>/<task_id>

where <task_id> is the ID value you can copy from the log file for the application. The task ID specifies the latest execution of the step.

After you find the href and task ID for a step, you can use those values to retrieve the most recent logs and status of the step.
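Collecting the href values from the application log response can be automated. The following sketch parses a shortened copy of the sample response shown above and builds one URL per step. The helper step_log_urls and the base URL are hypothetical, and the sketch does not send any requests.

```python
import json

logs = json.loads("""
{
  "app_name": "example_integration",
  "state": "SUCCESS",
  "steps": [
    {"href": "/api/v1/tasks/2df5e7d5-c680-4d9d-860c-e1ceccd1b189",
     "id": "2df5e7d5-c680-4d9d-860c-e1ceccd1b189",
     "name": "First EM7 Query", "state": "SUCCESS", "traceback": null},
    {"href": "/api/v1/tasks/49e1212b-b512-4fa7-b099-ea6b27acf128",
     "id": "49e1212b-b512-4fa7-b099-ea6b27acf128",
     "name": "second EM7 Query", "state": "SUCCESS", "traceback": null}
  ]
}
""")


def step_log_urls(base_url, app_logs):
    """Map each step name to the full URL built from its href."""
    return {
        step["name"]: base_url.rstrip("/") + step["href"]
        for step in app_logs["steps"]
    }


urls = step_log_urls("https://10.1.1.111", logs)
for name, url in urls.items():
    print(name, url)
```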

Default Steps

The "Base Steps" SyncPack contains a default set of steps that are used in a variety of different SyncPacks. You must install and activate this SyncPack before you can run any of the other SyncPacks.

This SyncPack is included with the most recent release of the PowerFlow Platform. You can also download newer versions of this SyncPack, if available, from the PowerPacks page of the ScienceLogic Support Site at https://support.sciencelogic.com/s/.

Starting with version 1.5.0 of the "Base Steps" SyncPack, the "QueryREST" step has been deprecated.

For more information about the latest releases of this SyncPack, see the SL1 PowerFlow Synchronization PowerPack Release Notes.

Contents of the SyncPack

This SyncPack contains the "Template App" PowerFlow application. You can use the "Template App" application as a template for building new PowerFlow applications.

Click the down arrow icon next to the application name to view the steps and workflow for that application. Click the application name to go to the Application detail page.

This SyncPack includes the following steps, which you can use in new and existing applications:

- Cache Delete. Deletes data from the cache.

- Cache Read. Reads data from the cache using the save_key option and sets the returned data for the next step.

- Cache Save. Takes any input data and saves it to a cache with the key specified in the save_key parameter. The key the data was saved with is saved for the next step.

- DeleteREST. Facilitates REST DELETE interactions, returns the returned data dictionary and specified headers as data for the next step.

- Deprecated: Direct Cache Read. Attempts to read from cache without using any N1QL or indexes.

- Deprecated: Query REST. Facilitates REST interactions, returns the returned data dictionary and specified headers as data for the next step.

- GetREST. Facilitates REST GET interactions, returns the returned data dictionary and specified headers as data for the next step.

- Jinja Template Data Render. Transforms the incoming data using a defined Jinja2 template.

- MS-SQL Describe. Executes a Microsoft SQL SP_HELP Statement.

- MS-SQL Insert. Executes a Microsoft SQL INSERT Statement.

- MS-SQL Select. Executes a Microsoft SQL SELECT statement.

- MySQL Delete. Executes a MySQL DELETE statement.

- MySQL Describe. Executes a MySQL DESC statement.

- MySQL Insert. Executes a MySQL INSERT statement.

- MySQL Select. Executes a MySQL SELECT statement.

- PostREST. Facilitates REST POST interactions, returns the returned data dictionary and specified headers as data for the next step.

- PutREST. Facilitates REST PUT interactions, returns the returned data dictionary and specified headers as data for the next step.

- Query GraphQL. Queries a remote GraphQL endpoint and retrieves the data.

- QueryRest: BearerAuth. Makes a RESTful request to an endpoint with BearerAuth.

- QueryRest: OAuth. Makes a RESTful request to an endpoint with OAuth.

- Run a command through an SSH tunnel. SSHes into the provided system and executes a command.

- Trigger Application. Lets you trigger any number of the same application based on application variables or input data. If this step is set to have an output_to setting, the step will automatically wait until all of its child triggered applications are completed. If output_to is not set, the step will simply trigger the child applications and then report success or failure. If this step is not set with an output_to value, you can manually enable waiting for child_failure with the wait_for_child_completion parameter. For more information, see Creating an Application that Uses a Trigger Application Operator.

To view the code for a step, select a SyncPack from the SyncPacks page, click the tab, and select the step you want to view.